DevLake brings all your DevOps data into one practical, personalized, extensible view. Ingest, analyze, and visualize data from an ever-growing list of developer tools, with our free and open source product.

DevLake is most exciting for developer teams looking to make better sense of their development process and to bring a more data-driven approach to their own practices. With DevLake, you can ask your process any question: just connect and query.

Dashboard Screenshot

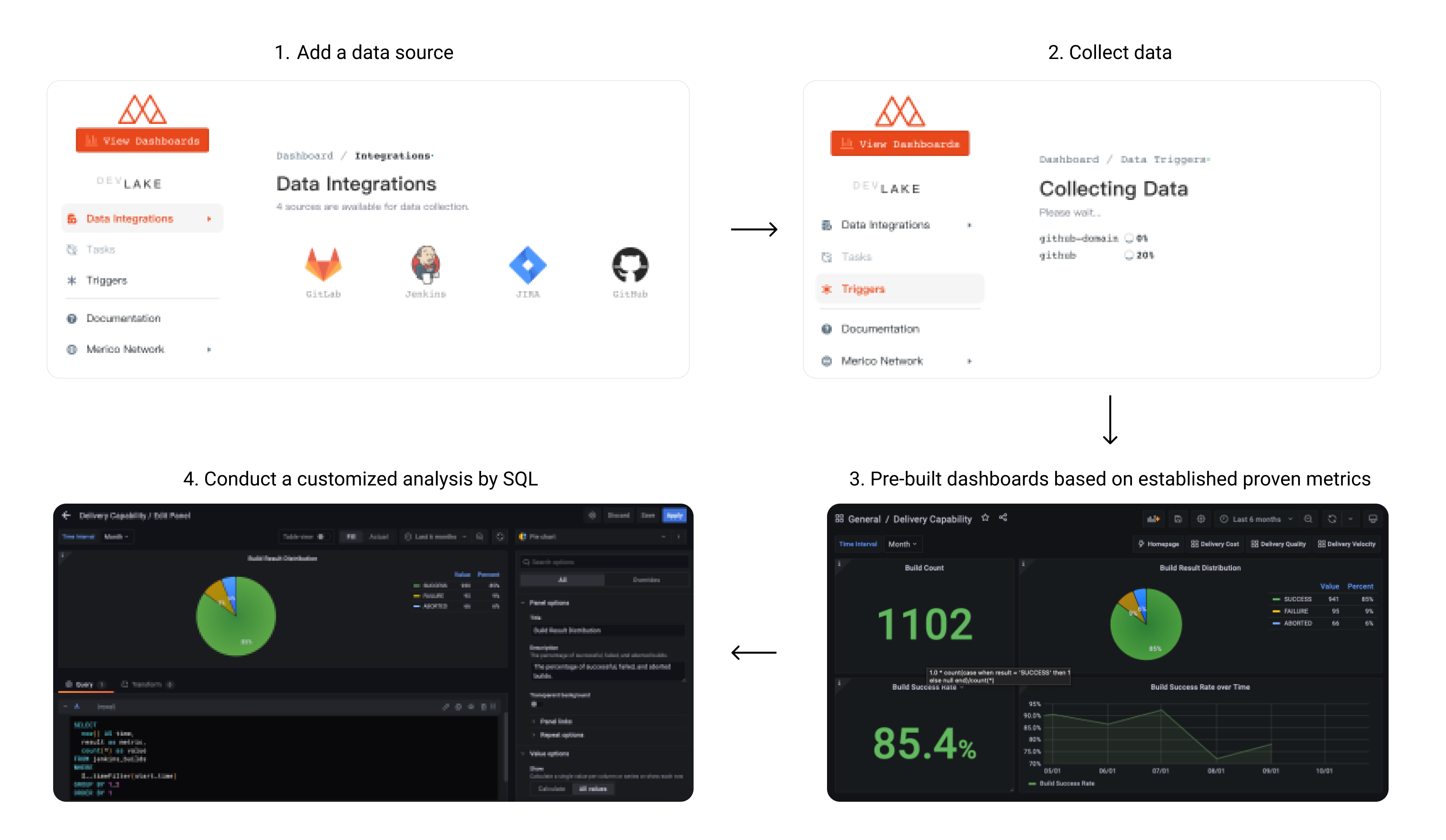

User Flow

Why DevLake?

- Gain a comprehensive understanding of the software development lifecycle and dig into workflow bottlenecks

- Review team iteration performance in a timely way for rapid feedback and agile adjustment

- Quickly build scenario-based data dashboards and drill down to analyze the root causes of problems

What can be accomplished with DevLake?

- Collect DevOps performance data for the whole process

- Share an abstraction layer across similar tools to output standardized performance data

- Use 20+ built-in performance metrics with drill-down analysis

- Run custom SQL analysis and build scenario-based data views with drag and drop

- Quickly connect new data sources, thanks to a flexible architecture and plugin design

See Demo

Click here to see the demo. The demo is based on data from this repo.

Username/Password: test/test

Contents

| Section | Sub-section | Description | Documentation Link |
|---|---|---|---|
| Data Sources | Supported Data Sources | Links to specific plugin usage & details | View Section |
| Setup Guide | User Setup | Set up DevLake locally as a user | View Section |
| | Developer Setup | Set up a development environment locally | View Section |
| | Cloud Setup | Set up DevLake in the cloud with Tin | View Section |
| Tests | Tests | Commands for running tests | View Section |
| Make Contribution | Understand the architecture of DevLake | See the architecture diagram | View Section |
| | Build a Plugin | Details on how to make your own plugin | View Section |
| | Add Plugin Metrics | Guide to adding metrics | View Section |
| | Contribution specs | How to contribute to this repo | View Section |
| User Guide, Help, and more | Grafana | How to visualize the data | View Section |
| | Need Help | Message us on Discord | View Section |
| | FAQ | Frequently asked questions by users | View Section |
| | License | The project license | View Section |

Data Sources We Currently Support

Below is a list of data source plugins used to collect & enrich data from specific sources. Each has a README.md file with basic setup, troubleshooting, and metrics info.

For more information on building a new data source plugin, see Build a Plugin.

| Section | Section Info | Docs |
|---|---|---|
| Jira | Summary, Data & Metrics, Configuration, Plugin API | Link |
| GitLab | Summary, Data & Metrics, Configuration, Plugin API | Link |
| Jenkins | Summary, Data & Metrics, Configuration, Plugin API | Link |
| GitHub | Summary, Data & Metrics, Configuration, Plugin API | Link |

Setup Guide

There are three ways to set up DevLake: user setup, developer setup, and cloud setup.

User setup

- If you only plan to run the product locally, this is the ONLY section you should need.

- Commands written `like this` are to be run in your terminal.

Required Packages to Install

NOTE: After installing Docker, you may need to start the Docker application and restart your terminal.

Commands to run in your terminal

- Clone the repository:

git clone https://github.com/merico-dev/lake.git devlake

cd devlake

cp .env.example .env

- Start Docker on your machine, then run `docker compose up -d` to start the services.

- Visit localhost:4000 to set up configuration files.

- Navigate to desired plugins pages on the Integrations page

- You will need to enter the required information for the plugins you intend to use.

- Please reference the following for more details on how to configure each one:

-> Jira

-> GitLab

-> Jenkins

-> GitHub

- Submit the form to update the values by clicking on the Save Connection button on each form page

- DevLake takes a while to fully boot up. If the config UI complains that the API is unreachable, wait a few seconds and refresh the page.

- To collect this repo for a quick preview, provide a GitHub personal access token on the Data Integrations / GitHub page.

- Visit localhost:4000/triggers to trigger data collection.

- Click the View Dashboards button when done (username: admin, password: admin). The button appears on the Trigger Collection page once data collection has finished.
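Because DevLake takes a while to boot, a script that opens the config UI may want to wait until it responds. The shell function below is a minimal sketch, not part of DevLake: the name `wait_for_url` is hypothetical, and it assumes `curl` is installed and the config UI is served at localhost:4000 as in the steps above. It only checks that the URL answers, so the API behind the UI may still be starting and a page refresh can still be needed.

```shell
# Hypothetical helper (not part of DevLake): poll a URL until it returns any
# HTTP response, giving up after a timeout in seconds (default 120).
wait_for_url() {
  url=$1
  timeout=${2:-120}
  start=$(date +%s)
  # Without -f, curl exits 0 for any HTTP status, so this tests reachability only.
  until curl -s -o /dev/null --max-time 5 "$url"; do
    if [ $(( $(date +%s) - start )) -ge "$timeout" ]; then
      echo "$url not ready after ${timeout}s" >&2
      return 1
    fi
    sleep 2
  done
}

# Example: wait up to three minutes for the config UI.
# wait_for_url http://localhost:4000 180
```

The overall deadline keeps a script from hanging forever if the containers never come up.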

Set up a cron job

To synchronize data periodically, we provide lake-cli, a tool for sending data collection requests, along with a cron job that triggers the CLI periodically.

Developer Setup

Requirements

- Docker

- Golang

- Make

  - Mac: already installed

  - Windows: Download

  - Ubuntu: `sudo apt-get install build-essential`

How to set up the dev environment

- Navigate to where you would like to install this project and clone the repository:

git clone https://github.com/merico-dev/lake.git

cd lake

- Install Go packages:

make install

- Copy the sample config file to a new local file:

cp .env.example .env

- Update the following variables in the file .env:

  - DB_URL: Replace mysql:3306 with 127.0.0.1:3306

  - COMPOSE_PROFILES: Change user to dev

- Start the MySQL and Grafana containers:

Make sure the Docker daemon is running before this step.

docker compose up

- Run lake and the config UI in dev mode in two separate terminals:

# run lake

make dev

# run config UI

make configure-dev

- Visit the config UI at localhost:4000 to configure data sources.

- Navigate to desired plugins pages on the Integrations page

- You will need to enter the required information for the plugins you intend to use.

- Please reference the following for more details on how to configure each one:

-> Jira

-> GitLab

-> Jenkins

-> GitHub

- Submit the form to update the values by clicking on the Save Connection button on each form page

- Visit localhost:4000/triggers to trigger data collection.

- Please refer to the wiki page How to trigger data collection. Data collection can take up to 20 minutes for large projects (GitLab 10k+ commits or Jira 5k+ issues).

- Click the View Dashboards button when done (username: admin, password: admin). The button is shown in the top left.
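If you script the developer setup, the two `.env` changes from the "Update the following variables" step can be applied with `sed`. This is a minimal sketch with a hypothetical function name, `apply_dev_overrides`; it assumes the file has a line starting with `DB_URL=` that contains `mysql:3306` and a line that reads exactly `COMPOSE_PROFILES=user`. The `-i.bak` form edits the file in place, keeps a `.bak` backup, and is accepted by both GNU and BSD sed.

```shell
# Hypothetical helper (not part of DevLake): apply the developer-setup .env changes.
#   DB_URL:           replace mysql:3306 with 127.0.0.1:3306
#   COMPOSE_PROFILES: change user to dev
# The original file is kept as <file>.bak.
apply_dev_overrides() {
  sed -i.bak \
    -e '/^DB_URL=/s/mysql:3306/127.0.0.1:3306/' \
    -e 's/^COMPOSE_PROFILES=user$/COMPOSE_PROFILES=dev/' \
    "$1"
}

# Example, run from the repository root after `cp .env.example .env`:
# apply_dev_overrides .env
```

Editing the two lines by hand works just as well; the sketch only saves time when the setup is repeated.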

Cloud setup

If you want to run DevLake in a cloud environment, you can set it up with Tin. See the detailed setup guide.

Disclaimer:

To protect your information, users of Tin hosting must set passwords for their DevLake applications. We built DevLake as a self-hosted product in part to ensure that users have total protection and ownership of their data. While the same remains true for Tin hosting, this risk can only be eliminated by the end user.

Tests

To run the tests:

make test

Make Contribution

This section lists all the documents that help you contribute to the repo.

Understand the Architecture of DevLake

Architecture Diagram

Add a Plugin

plugins/README.md

Add Plugin Metrics

plugins/HOW-TO-ADD-METRICS.md

Contributing Spec

CONTRIBUTING.md

User Guide, Help and more

Grafana

We use Grafana as a visualization tool to build charts for the data stored in our database. Using SQL queries, we can add panels to build, save, and edit customized dashboards.

All the details on provisioning and customizing a dashboard can be found in the Grafana Doc.

Need help?

Message us on Discord

FAQ

Q: When I run docker-compose up -d I get this error: "qemu: uncaught target signal 11 (Segmentation fault) - core dumped". How do I fix this?

A: M1 Mac users need to download a specific version of Docker for their machine. You can find it here.

License

This project is licensed under Apache License 2.0 - see the LICENSE file for details.