Documentation

Index ¶

- Variables

- func Finish(fns ...func() error) error

- func FinishVoid(fns ...func())

- func ForEach(generate GenerateFunc, mapper ForEachFunc, opts ...Option)

- func MapReduce(generate GenerateFunc, mapper MapperFunc, reducer ReducerFunc, opts ...Option) (interface{}, error)

- func MapReduceChan(source <-chan interface{}, mapper MapperFunc, reducer ReducerFunc, ...) (interface{}, error)

- func MapReduceVoid(generate GenerateFunc, mapper MapperFunc, reducer VoidReducerFunc, ...) error

- type ForEachFunc

- type GenerateFunc

- type MapFunc

- type MapperFunc

- type Option

- type ReducerFunc

- type VoidReducerFunc

- type Writer

Constants ¶

This section is empty.

Variables ¶

var (
	// ErrCancelWithNil is an error that mapreduce was cancelled with nil.
	ErrCancelWithNil = errors.New("mapreduce cancelled with nil")
	// ErrReduceNoOutput is an error that reduce did not output a value.
	ErrReduceNoOutput = errors.New("reduce not writing value")
)

Functions ¶

func ForEach ¶

func ForEach(generate GenerateFunc, mapper ForEachFunc, opts ...Option)

ForEach maps all elements produced by the given generate func, but produces no output.
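To make the generate/mapper split concrete, here is a minimal self-contained sketch. It does not call the package: `forEachSketch` is a hypothetical stand-in written for this example, the explicit `workers` argument replaces the `opts ...Option` parameter, and the fan-out strategy is an assumption rather than the package's actual implementation.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// Local copies of the documented types, so the sketch compiles on its own.
type (
	GenerateFunc func(source chan<- interface{})
	ForEachFunc  func(item interface{})
)

// forEachSketch is a hypothetical stand-in for ForEach: it runs generate in
// its own goroutine and fans the items out to a fixed number of workers.
func forEachSketch(generate GenerateFunc, mapper ForEachFunc, workers int) {
	source := make(chan interface{})
	go func() {
		defer close(source)
		generate(source)
	}()

	var wg sync.WaitGroup
	for i := 0; i < workers; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for item := range source {
				mapper(item)
			}
		}()
	}
	wg.Wait()
}

// sumWithForEach adds up 1..n through side effects only, because the mapper
// has no way to return a value.
func sumWithForEach(n int) int64 {
	var total int64
	forEachSketch(func(source chan<- interface{}) {
		for i := 1; i <= n; i++ {
			source <- i
		}
	}, func(item interface{}) {
		atomic.AddInt64(&total, int64(item.(int)))
	}, 4)
	return total
}

func main() {
	fmt.Println(sumWithForEach(10)) // 55
}
```

Because the mapper may run on several goroutines at once, any shared state it touches (here the running total) needs synchronization.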

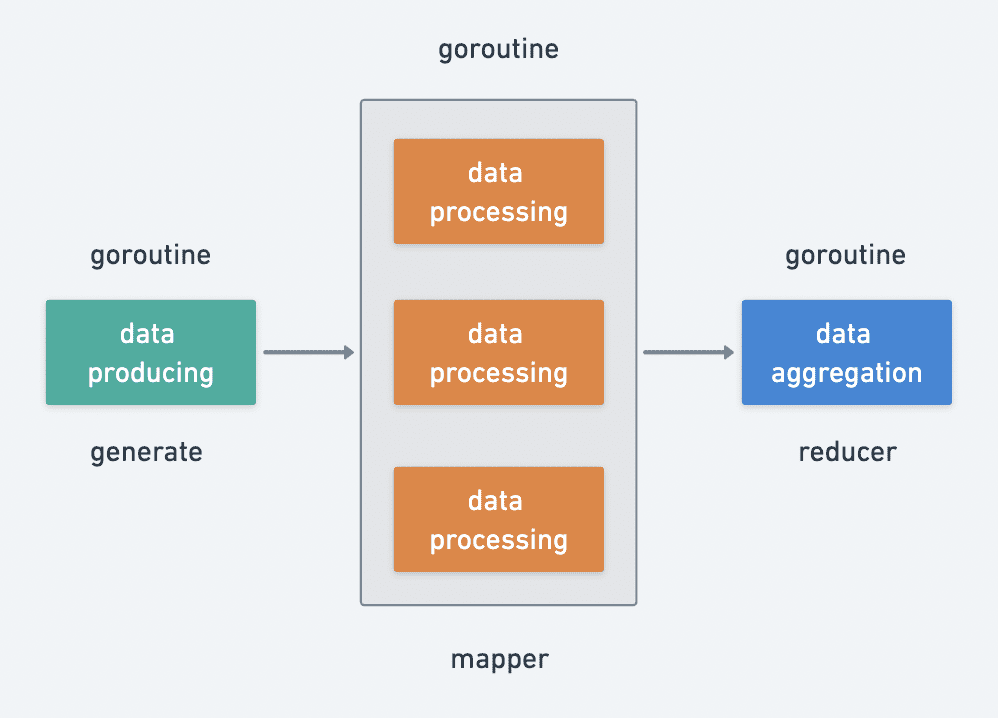

func MapReduce ¶

func MapReduce(generate GenerateFunc, mapper MapperFunc, reducer ReducerFunc, opts ...Option) (interface{}, error)

MapReduce maps all elements produced by the given generate func, and reduces the output elements with the given reducer.
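The three stages can be sketched without the package as follows. Everything here is illustrative: `mapReduceSketch`, `chanWriter` and `resultWriter` are hypothetical names, the `Writer`, `MapperFunc` and `ReducerFunc` shapes are assumed by analogy with `MapFunc` and `VoidReducerFunc`, and the way the two documented errors are returned is a guess based on their doc comments. Cancellation in this sketch only records the error; it does not stop work already in flight.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// Local stand-ins; the Writer, MapperFunc and ReducerFunc shapes are assumptions.
type (
	Writer       interface{ Write(v interface{}) }
	GenerateFunc func(source chan<- interface{})
	MapperFunc   func(item interface{}, writer Writer, cancel func(error))
	ReducerFunc  func(pipe <-chan interface{}, writer Writer, cancel func(error))
)

var (
	ErrCancelWithNil  = errors.New("mapreduce cancelled with nil")
	ErrReduceNoOutput = errors.New("reduce not writing value")
)

// chanWriter forwards mapper output into the pipe read by the reducer.
type chanWriter struct{ ch chan<- interface{} }

func (w chanWriter) Write(v interface{}) { w.ch <- v }

// resultWriter collects what the reducer writes; the last value wins.
type resultWriter struct{ vals []interface{} }

func (w *resultWriter) Write(v interface{}) { w.vals = append(w.vals, v) }

// mapReduceSketch is a hypothetical, simplified stand-in for MapReduce.
func mapReduceSketch(generate GenerateFunc, mapper MapperFunc, reducer ReducerFunc, workers int) (interface{}, error) {
	var once sync.Once
	var cancelled bool
	var cancelErr error
	cancel := func(err error) { // only the first call is recorded
		once.Do(func() { cancelled, cancelErr = true, err })
	}

	source := make(chan interface{})
	go func() {
		defer close(source)
		generate(source)
	}()

	pipe := make(chan interface{})
	var wg sync.WaitGroup
	for i := 0; i < workers; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for item := range source {
				mapper(item, chanWriter{pipe}, cancel)
			}
		}()
	}
	go func() {
		wg.Wait()
		close(pipe)
	}()

	out := &resultWriter{}
	reducer(pipe, out, cancel)
	for range pipe { // drain so no mapper stays blocked after an early return
	}

	switch {
	case cancelled && cancelErr != nil:
		return nil, cancelErr
	case cancelled:
		return nil, ErrCancelWithNil
	case len(out.vals) == 0:
		return nil, ErrReduceNoOutput
	}
	return out.vals[len(out.vals)-1], nil
}

// sumSquares maps each i in 1..n to i*i and reduces by summing.
func sumSquares(n int) (interface{}, error) {
	return mapReduceSketch(func(source chan<- interface{}) {
		for i := 1; i <= n; i++ {
			source <- i
		}
	}, func(item interface{}, w Writer, cancel func(error)) {
		w.Write(item.(int) * item.(int))
	}, func(pipe <-chan interface{}, w Writer, cancel func(error)) {
		sum := 0
		for v := range pipe {
			sum += v.(int)
		}
		w.Write(sum)
	}, 4)
}

func main() {
	fmt.Println(sumSquares(4)) // 30 <nil>
}
```

Summation is used as the reducer because it is order-independent; with several workers the mapped values reach the pipe in no guaranteed order.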

func MapReduceChan ¶

func MapReduceChan(source <-chan interface{}, mapper MapperFunc, reducer ReducerFunc, opts ...Option) (interface{}, error)

MapReduceChan maps all elements received from the source channel, and reduces the output elements with the given reducer.
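The only difference from MapReduce is the input style: an existing channel instead of a generate func. The sketch below shows one way the two styles relate, assuming nothing about how the package bridges them internally; `fromChan` and `collect` are hypothetical helpers written for this example.

```go
package main

import "fmt"

// Local copy of the documented type, so the sketch compiles on its own.
type GenerateFunc func(source chan<- interface{})

// fromChan is a hypothetical adapter: it wraps an existing channel as a
// GenerateFunc by forwarding every received item into source.
func fromChan(in <-chan interface{}) GenerateFunc {
	return func(source chan<- interface{}) {
		for item := range in {
			source <- item
		}
	}
}

// collect runs a GenerateFunc the way a consumer would and gathers its items.
func collect(generate GenerateFunc) []interface{} {
	source := make(chan interface{})
	go func() {
		defer close(source)
		generate(source)
	}()

	var items []interface{}
	for item := range source {
		items = append(items, item)
	}
	return items
}

func main() {
	in := make(chan interface{}, 3)
	in <- "a"
	in <- "b"
	in <- "c"
	close(in) // the producer must close the channel, or the consumer never finishes

	fmt.Println(collect(fromChan(in))) // [a b c]
}
```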

func MapReduceVoid ¶

func MapReduceVoid(generate GenerateFunc, mapper MapperFunc, reducer VoidReducerFunc, opts ...Option) error

MapReduceVoid maps all elements produced by the given generate func, and reduces the output elements with the given reducer, which produces no result value.

Types ¶

type ForEachFunc ¶

type ForEachFunc func(item interface{})

ForEachFunc processes an element but produces no output.

type GenerateFunc ¶

type GenerateFunc func(source chan<- interface{})

GenerateFunc is used to let callers send elements into source.

type MapFunc ¶

type MapFunc func(item interface{}, writer Writer)

MapFunc is used to do element processing and write the output to writer.

type MapperFunc ¶

MapperFunc processes an element and writes the output to writer. Use the cancel func to cancel the processing.

type Option ¶

type Option func(opts *mapReduceOptions)

Option defines the method to customize the mapreduce.

func WithContext ¶

WithContext customizes a mapreduce run to use the given ctx.

func WithWorkers ¶

WithWorkers customizes a mapreduce run with the given number of workers.
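`Option` follows the functional-options pattern: each option is a function that mutates a private options struct. The sketch below illustrates the pattern only. The fields of `mapReduceOptions`, the `defaultWorkers` value, the clamping of worker counts below 1, and the lowercase `withContext`/`withWorkers`/`buildOptions` helpers are all assumptions made for this example, since the real struct is unexported and the signatures of WithContext and WithWorkers are not shown here.

```go
package main

import (
	"context"
	"fmt"
)

const defaultWorkers = 16 // arbitrary default chosen for this sketch

// mapReduceOptions is unexported in the package; these fields are assumptions.
type mapReduceOptions struct {
	ctx     context.Context
	workers int
}

// Option matches the documented shape: a function that edits the options.
type Option func(opts *mapReduceOptions)

// withContext is a hypothetical counterpart of WithContext.
func withContext(ctx context.Context) Option {
	return func(opts *mapReduceOptions) { opts.ctx = ctx }
}

// withWorkers is a hypothetical counterpart of WithWorkers; values below 1
// are clamped to 1 so that at least one worker always runs.
func withWorkers(workers int) Option {
	return func(opts *mapReduceOptions) {
		if workers < 1 {
			workers = 1
		}
		opts.workers = workers
	}
}

// buildOptions starts from defaults and applies each option in order.
func buildOptions(opts ...Option) *mapReduceOptions {
	options := &mapReduceOptions{ctx: context.Background(), workers: defaultWorkers}
	for _, opt := range opts {
		opt(options)
	}
	return options
}

func main() {
	ctx, cancel := context.WithCancel(context.Background())
	defer cancel()

	o := buildOptions(withContext(ctx), withWorkers(4))
	fmt.Println(o.workers, o.ctx == ctx) // 4 true
}
```

Options are applied in order, so a later option overrides an earlier one that sets the same field.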

type ReducerFunc ¶

ReducerFunc reduces all the mapping output and writes the result to writer. Use the cancel func to cancel the processing.

type VoidReducerFunc ¶

type VoidReducerFunc func(pipe <-chan interface{}, cancel func(error))

VoidReducerFunc reduces all the mapping output but produces no output of its own. Use the cancel func to cancel the processing.
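A reducer of this shape reads from pipe until it is closed and reports failure through cancel rather than through a return value. The sketch below shows that contract in isolation. `runVoidReducer`, `rejectNegatives` and `errNegative` are hypothetical names, and the harness is a simplification: it feeds the pipe from a slice instead of from mappers, and it expects cancel to be called only from the reducer's own goroutine.

```go
package main

import (
	"errors"
	"fmt"
)

// Local copy of the documented type, so the sketch compiles on its own.
type VoidReducerFunc func(pipe <-chan interface{}, cancel func(error))

var errNegative = errors.New("negative value") // hypothetical error for this sketch

// runVoidReducer is a hypothetical harness: it streams items into pipe, runs
// the reducer, and returns the first non-nil error handed to cancel.
func runVoidReducer(items []int, reducer VoidReducerFunc) error {
	pipe := make(chan interface{})
	go func() {
		defer close(pipe)
		for _, item := range items {
			pipe <- item
		}
	}()

	var firstErr error
	cancel := func(err error) {
		if firstErr == nil {
			firstErr = err
		}
	}
	reducer(pipe, cancel)
	for range pipe { // drain so the producer goroutine can exit
	}
	return firstErr
}

// rejectNegatives consumes every value and cancels on the first negative one.
func rejectNegatives(pipe <-chan interface{}, cancel func(error)) {
	for v := range pipe {
		if v.(int) < 0 {
			cancel(errNegative)
			return
		}
	}
}

func main() {
	fmt.Println(runVoidReducer([]int{1, 2, 3}, rejectNegatives))  // <nil>
	fmt.Println(runVoidReducer([]int{1, -2, 3}, rejectNegatives)) // negative value
}
```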