CubeFS

Community Meeting

The CubeFS Project holds a bi-weekly online community meeting. To join, or to view notes and recordings of previous meetings, please see the meeting schedule and meeting minutes.

Note: The master branch may be in an unstable or even broken state during development.

Please use releases instead of the master branch in order to get a stable set of binaries.


Overview

CubeFS (储宝文件系统 in Chinese) is a cloud-native storage platform that provides both POSIX-compliant and S3-compatible interfaces. It is hosted by the Cloud Native Computing Foundation (CNCF) as a sandbox project.

CubeFS is commonly used as the underlying storage infrastructure for online applications, databases, data processing services, and machine learning jobs orchestrated by Kubernetes.

An advantage of doing so is that storage is separated from compute: each can be scaled up or down independently of the other as the workload changes, making it possible to match resources to the storage and compute capacity actually required at any given time.

Some key features of CubeFS include:

- Scale-out metadata management
- Strong replication consistency
- Specific performance optimizations for large/small files and sequential/random writes
- Multi-tenancy
- POSIX-compatible and mountable
- S3-compatible object storage interface

We are committed to making CubeFS better and more mature. Please stay tuned.

Documents

English version: https://cubefs.readthedocs.io/en/latest/

Chinese version: https://cubefs.readthedocs.io/zh_CN/latest/

Benchmark

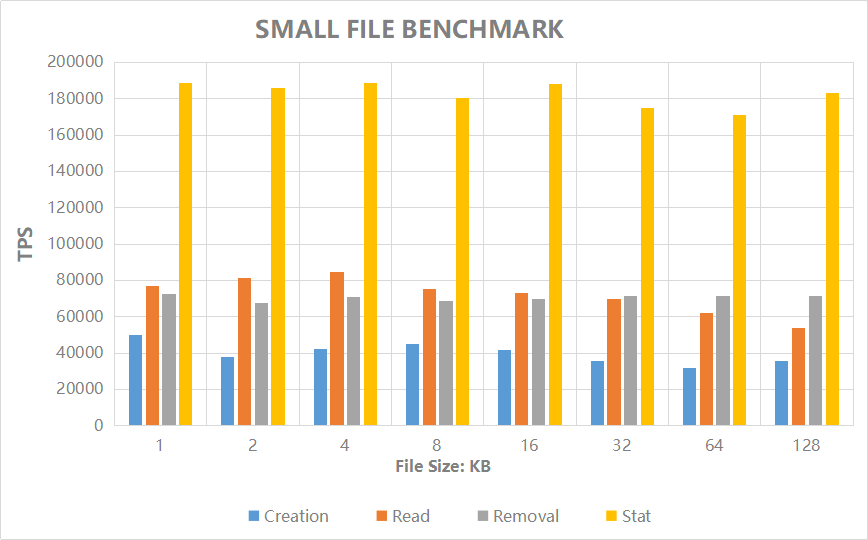

Small-file operation performance and scalability, benchmarked with mdtest:

| File Size (KB) | 1 | 2 | 4 | 8 | 16 | 32 | 64 | 128 |
|---|---|---|---|---|---|---|---|---|
| Creation (TPS) | 70383 | 70383 | 73738 | 74617 | 69479 | 67435 | 47540 | 27147 |
| Read (TPS) | 108600 | 118193 | 118346 | 122975 | 116374 | 110795 | 90462 | 62082 |
| Removal (TPS) | 87648 | 84651 | 83532 | 79279 | 85498 | 86523 | 80946 | 84441 |
| Stat (TPS) | 231961 | 263270 | 264207 | 252309 | 240244 | 244906 | 273576 | 242930 |

Refer to cubefs.readthedocs.io for IO and metadata performance and scalability results.

Build CubeFS

Prerequisites:

- Go version >= 1.16
- export GO111MODULE=off
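A minimal sketch of a pre-build check, assuming GNU coreutils (for sort -V) and a go binary on PATH. The function names version_ge and check_go_version are hypothetical helpers, not part of the CubeFS tree; they only compare the installed Go version against the 1.16 minimum listed above.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of CubeFS): verify the "Go >= 1.16" prerequisite.
# Assumes GNU sort, whose -V flag orders version strings numerically.

# version_ge A B: succeeds when version A is greater than or equal to version B.
version_ge() {
  # After a version-aware ascending sort, B comes first exactly when A >= B.
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# check_go_version: reads e.g. "go version go1.20.3 linux/amd64" and compares 1.20.3 to 1.16.
check_go_version() {
  local v
  v=$(go version 2>/dev/null | awk '{print $3}' | sed 's/^go//')
  if [ -z "$v" ]; then
    echo "go not found on PATH" >&2
    return 1
  fi
  if version_ge "$v" 1.16; then
    echo "Go $v meets the >= 1.16 requirement"
  else
    echo "Go $v is older than 1.16; please upgrade" >&2
    return 1
  fi
}
```

For example, running check_go_version before make fails early with a readable message instead of a compile error on an old toolchain. Note that a plain string comparison would wrongly rank 1.9 above 1.16, which is why sort -V is used.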

Build for x86

$ git clone http://github.com/cubefs/cubefs.git

$ cd cubefs

$ make

Build for arm64

For example, if the cubefs directory is /root/arm64/cubefs, build.sh will automatically download the following source code into the vendor/dep directory:

bzip2-1.0.6 lz4-1.9.2 zlib-1.2.11 zstd-1.4.5

For gcc v4 or v5:

cd /root/arm64/cubefs

export CPUTYPE=arm64_gcc4 && bash ./build.sh

For gcc v9:

export CPUTYPE=arm64_gcc9 && bash ./build.sh

Cross-compiling with Docker is also supported:

The image uses gcc v4 and supports Ubuntu 14.04 and later and CentOS 7.6 and later. Check that libstdc++.so.6 provides at least `GLIBCXX_3.4.19`; if it does not, please update libstdc++.

cd /root/arm64/cubefs

docker build --rm --tag arm64_gcc4_golang1_13_ubuntu_14_04_cubefs ./build/compile/arm64/gcc4

make dist-clean

docker run -v /root/arm64/cubefs:/root/cubefs arm64_gcc4_golang1_13_ubuntu_14_04_cubefs /root/buildcfs.sh

To remove the image:

docker image remove -f arm64_gcc4_golang1_13_ubuntu_14_04_cubefs
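The GLIBCXX_3.4.19 requirement for the gcc4 build can be checked with a small helper like the one below. It is a sketch, not part of CubeFS: has_glibcxx is a hypothetical name, and it relies on libstdc++ keeping every older GLIBCXX version tag, so finding the tag means the library is at least that version.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of CubeFS): check whether a libstdc++ build
# exports the GLIBCXX_3.4.19 symbol version mentioned above.
# libstdc++ retains all older GLIBCXX_x.y.z version tags, so the presence of
# a tag means the library is at least that new.

# has_glibcxx LIB [TAG]: succeeds when LIB contains TAG (default GLIBCXX_3.4.19).
has_glibcxx() {
  local lib=$1 tag=${2:-GLIBCXX_3.4.19}
  if [ ! -r "$lib" ]; then
    echo "cannot read $lib" >&2
    return 1
  fi
  # -a: treat the binary as text; -F: match the dots literally;
  # -w: do not let a shorter tag match as a prefix of a longer one.
  grep -aqFw "$tag" "$lib"
}
```

For example, has_glibcxx /usr/lib64/libstdc++.so.6 on CentOS, or has_glibcxx /usr/lib/aarch64-linux-gnu/libstdc++.so.6 on arm64 Ubuntu. These paths are typical but assumed; if they differ on your system, locate the library with ldconfig -p | grep libstdc++.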

Yum Tools to Run a CubeFS Cluster for CentOS 7+

The RPM package dependencies can be installed with:

$ yum install http://storage.jd.com/chubaofsrpm/latest/cfs-install-latest-el7.x86_64.rpm

$ cd /cfs/install

$ tree -L 2

.
├── install_cfs.yml
├── install.sh
├── iplist
├── src
└── template
    ├── client.json.j2
    ├── create_vol.sh.j2
    ├── datanode.json.j2
    ├── grafana
    ├── master.json.j2
    └── metanode.json.j2

Set parameters of the CubeFS cluster in iplist.

- The [master], [datanode], [metanode], [monitor], [client] modules define the IP addresses of each role.
- The #datanode config module defines parameters of DataNodes. datanode_disks defines the path and reserved space, separated by ":". The path is where the data is stored, so make sure it exists and has at least 30GB of space; the reserved space is the minimum free space (in bytes) reserved for the path.
- The [cfs:vars] module defines parameters for the SSH connection, so make sure the SSH port, username, and password are the same on all nodes before starting.
- The #metanode config module defines parameters of MetaNodes. metanode_totalMem defines the maximum memory (in bytes) that the MetaNode process can use.

[master]
10.196.0.1
10.196.0.2
10.196.0.3
[datanode]
...
[cfs:vars]
ansible_ssh_port=22
ansible_ssh_user=root
ansible_ssh_pass="password"
...
#datanode config
...
datanode_disks = '"/data0:10737418240","/data1:10737418240"'
...
#metanode config
...
metanode_totalMem = "28589934592"
...
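The byte values above are binary multiples: 10737418240 is 10 × 1024^3, i.e. 10 GiB reserved on each disk. A small sketch with hypothetical helper names, gib_to_bytes and build_datanode_disks (neither is part of the install scripts), can generate the datanode_disks value instead of computing it by hand:

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of CubeFS): compute byte values for iplist.

# gib_to_bytes N: print N GiB in bytes (N * 1024^3) using shell integer arithmetic.
gib_to_bytes() {
  echo $(( $1 * 1024 * 1024 * 1024 ))
}

# build_datanode_disks RESERVE_GIB PATH...: print a datanode_disks value in the
# '"path:bytes","path:bytes"' form used in iplist, reserving RESERVE_GIB on each path.
build_datanode_disks() {
  local reserve out="" p
  reserve=$(gib_to_bytes "$1")
  shift
  for p in "$@"; do
    # Insert a comma before every entry except the first.
    out+="${out:+,}\"${p}:${reserve}\""
  done
  printf "'%s'\n" "$out"
}
```

For example, build_datanode_disks 10 /data0 /data1 prints '"/data0:10737418240","/data1:10737418240"', the same value as in the iplist example, and gib_to_bytes 25 prints 26843545600.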

For more configurations please refer to documentation.

Start each component of the CubeFS cluster with the install.sh script (make sure the Master is started first):

$ bash install.sh -h

Usage: install.sh -r | --role [datanode | metanode | master | objectnode | console | monitor | client | all | createvol ] [2.1.0 or latest]

$ bash install.sh -r master

$ bash install.sh -r metanode

$ bash install.sh -r datanode

$ bash install.sh -r monitor

$ bash install.sh -r client

$ bash install.sh -r console

Check the mount point at /cfs/mountpoint on the client nodes defined in iplist.
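One way to script this check is to look the directory up in /proc/mounts. The sketch below assumes Linux and uses a hypothetical helper, is_mounted, which is not part of CubeFS; where util-linux is installed, mountpoint -q /cfs/mountpoint is an equivalent alternative.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of CubeFS): confirm that a directory is a mount point
# by looking it up in the second field of /proc/mounts (Linux only).

# is_mounted DIR [MOUNTS_FILE]: succeeds when DIR appears as a mount target.
is_mounted() {
  local dir=$1 mounts=${2:-/proc/mounts}
  # /proc/mounts lines are "source target fstype options dump pass".
  awk -v d="$dir" '$2 == d { found = 1 } END { exit !found }' "$mounts"
}
```

For example, is_mounted /cfs/mountpoint && echo mounted. Matching only the mount target keeps the check independent of how the client names its mount source.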

Open http://[the IP of console system] in a browser to access the web console (the console IP is defined in iplist). The default console user is root with password CubeFSRoot; the default monitor user is admin with password 123456.

Run a CubeFS Cluster within Docker

A helper tool called run_docker.sh (under the docker directory) has been provided to run CubeFS with docker-compose.

$ docker/run_docker.sh -r -d /data/disk

Note that /data/disk can be any directory, but make sure it has at least 10G of available space.
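A sketch of a pre-flight space check, using a hypothetical helper has_free_gib that is not part of run_docker.sh. It reads the available column from POSIX df output and treats "10G" as 10 GiB, which is an assumption about the unit:

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of run_docker.sh): check free space before starting.
# Treats "10G" as 10 GiB; that unit is an assumption.

# has_free_gib DIR GIB: succeeds when the filesystem holding DIR has at least GIB GiB free.
has_free_gib() {
  local dir=$1 need_kib=$(( $2 * 1024 * 1024 )) avail_kib
  # df -P keeps one line per filesystem; -k reports sizes in 1024-byte blocks.
  avail_kib=$(df -Pk "$dir" | awk 'NR == 2 { print $4 }')
  [ -n "$avail_kib" ] && [ "$avail_kib" -ge "$need_kib" ]
}
```

For example, mkdir -p /data/disk && has_free_gib /data/disk 10 && docker/run_docker.sh -r -d /data/disk. The directory has to exist first, because df reports on the filesystem that holds it.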

To check the mount status, use the mount command in the client docker shell:

$ mount | grep cubefs

To view Grafana monitor metrics, open http://127.0.0.1:3000 in a browser and log in with admin/123456.

To run server and client separately, use the following commands:

$ docker/run_docker.sh -b

$ docker/run_docker.sh -s -d /data/disk

$ docker/run_docker.sh -c

$ docker/run_docker.sh -m

For more usage:

$ docker/run_docker.sh -h

Helm chart to Run a CubeFS Cluster in Kubernetes

The cubefs-helm repository can help you deploy a CubeFS cluster quickly in containers orchestrated by Kubernetes.

Kubernetes 1.12+ and Helm 3 are required. cubefs-helm already integrates the CubeFS CSI plugin.

Download cubefs-helm

$ git clone https://github.com/cubefs/cubefs-helm

$ cd cubefs-helm

Copy kubeconfig file

The CubeFS CSI driver uses client-go to connect to the Kubernetes API server. First, copy the kubeconfig file into the cubefs-helm/cubefs/config/ directory and rename it to kubeconfig:

$ cp ~/.kube/config cubefs/config/kubeconfig

Create configuration yaml file

Create a cubefs.yaml file and put it in a path of your choice. This example assumes ~/cubefs.yaml:

$ cat ~/cubefs.yaml
path:
  data: /cubefs/data
  log: /cubefs/log

datanode:
  disks:
    - /data0:21474836480
    - /data1:21474836480

metanode:
  total_mem: "26843545600"

provisioner:
  kubelet_path: /var/lib/kubelet

Note that cubefs-helm/cubefs/values.yaml shows all the config parameters of CubeFS.

The parameters path.data and path.log are used to store server data and logs, respectively.

Add labels to Kubernetes node

Tag each Kubernetes node with the labels for the CubeFS server roles and CSI node it should run:

kubectl label node <nodename> cubefs-master=enabled

kubectl label node <nodename> cubefs-metanode=enabled

kubectl label node <nodename> cubefs-datanode=enabled

kubectl label node <nodename> cubefs-csi-node=enabled
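When one node takes several roles, the labels can be applied in a loop. This is a hypothetical helper, label_cubefs_node, not part of cubefs-helm; it only wraps the kubectl label commands above, and setting KUBECTL=echo prints the commands instead of running them:

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of cubefs-helm): apply several CubeFS labels to one node.
# Accepted roles match the labels above: master, metanode, datanode, csi-node.
# KUBECTL defaults to kubectl; set KUBECTL=echo for a dry run that only prints the commands.

# label_cubefs_node NODE ROLE...: runs "kubectl label node NODE cubefs-ROLE=enabled" per role.
label_cubefs_node() {
  local node=$1 role
  shift
  for role in "$@"; do
    case $role in
      master|metanode|datanode|csi-node) ;;
      *) echo "unknown role: $role" >&2; return 1 ;;
    esac
    ${KUBECTL:-kubectl} label node "$node" "cubefs-${role}=enabled" || return 1
  done
}
```

For example, label_cubefs_node <nodename> master metanode datanode csi-node applies all four labels to one node; an unknown role stops the loop before any kubectl call for that role.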

Deploy CubeFS cluster

$ helm install cubefs ./cubefs -f ~/cubefs.yaml

Reference

Haifeng Liu, et al., CFS: A Distributed File System for Large Scale Container Platforms. SIGMOD '19, June 30-July 5, 2019, Amsterdam, Netherlands.

For more information, please refer to https://dl.acm.org/citation.cfm?doid=3299869.3314046 and https://arxiv.org/abs/1911.03001

Contributing

We recommend the standard GitHub flow based on forking and pull requests.

See CONTRIBUTING.md for details.

Reporting a security vulnerability

See the security disclosure process for details.

Partners and Users

For a list of users and success stories see ADOPTERS.md.

License

CubeFS is licensed under the Apache License, Version 2.0.

For details, see LICENSE and NOTICE.