README

faas-netes

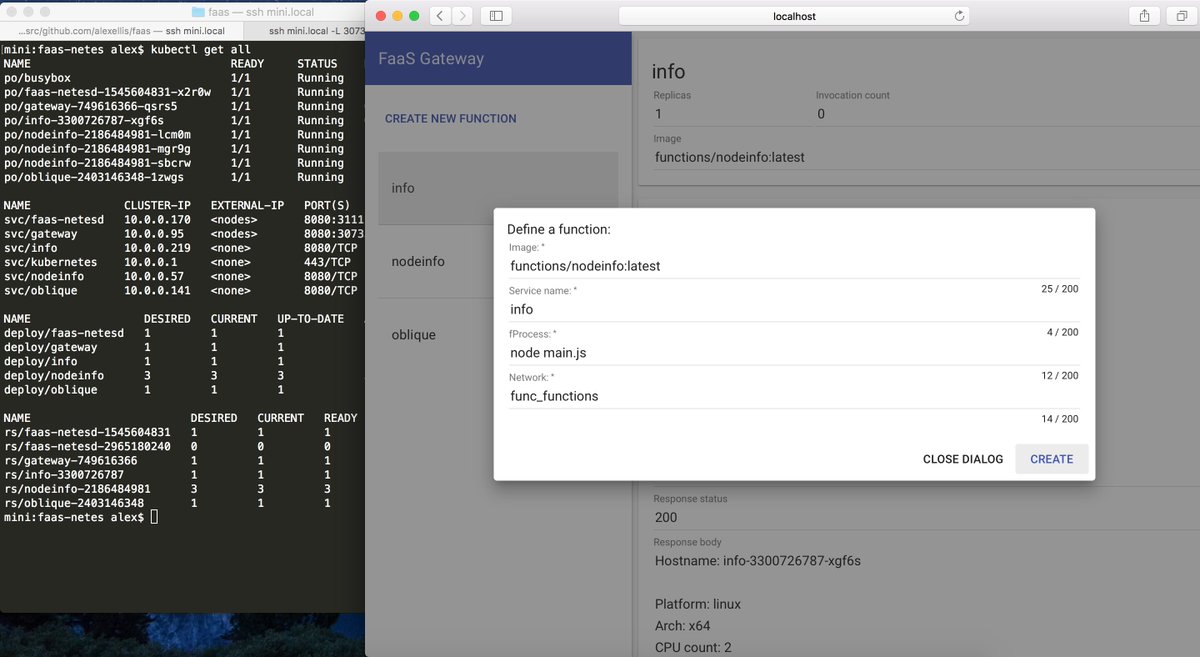

This is a plugin to enable Kubernetes as an OpenFaaS backend. The existing CLI and UI are fully compatible. It also opens up the possibility for other plugins to be built for orchestration frameworks such as Nomad, Mesos/Marathon or even a cloud-managed back-end such as Hyper.sh or Azure ACI.

Update: Watch the demo and intro to the CNCF Serverless Workgroup

OpenFaaS is an event-driven serverless framework for containers. Any container for Windows or Linux can be leveraged as a serverless function. OpenFaaS is quick and easy to deploy (in under 60 seconds) and lets you avoid writing boilerplate code.

In this README you'll find a technical overview and instructions for deploying FaaS on a Kubernetes cluster.

Docker Swarm is also supported.

- Serverless framework for containers
- Native Kubernetes integrations (API and ecosystem)
- Built-in UI
- YAML templates & Helm chart
- Over 9k GitHub stars
- Independent open-source project with 70 authors/contributors

You can watch my intro from the DockerCon closing keynote, with Alexa, Twitter and GitHub demos, or a complete walk-through of FaaS-netes showing Prometheus, auto-scaling, the UI and the CLI in action.

If you'd like to know more about the OpenFaaS project, head over to https://github.com/openfaas/faas

Get started

If you just want to deploy OpenFaaS on Kubernetes, follow the OpenFaaS and Kubernetes Deployment Guide, or read on for a technical overview.

To try our Helm chart, see the helm guide.

How is this project different from others?

Reference guide

Configuration

FaaS-netes can be configured via environment variables.

Environment variables:

| Option | Usage |
|---|---|
| enable_function_readiness_probe | Boolean - enable a readiness probe to test functions. Default: true |
| write_timeout | HTTP timeout for writing a response body from your function (in seconds). Default: 8 |
| read_timeout | HTTP timeout for reading the payload from the client caller (in seconds). Default: 8 |

Readiness checking

The readiness checking for functions assumes you are using our function watchdog, which writes a .lock file in the default "tempdir" within a container. To see this in action, delete the .lock file in a running Pod with kubectl exec; the function will then be re-scheduled.

Namespaces

By default, all OpenFaaS functions and services are deployed to the default namespace. To use separate namespaces for the functions and for the OpenFaaS services, use the Helm chart.

Asynchronous processing

To enable asynchronous processing, use the Helm chart configuration.

Technical overview

The code in this repository is a daemon (micro-service) that provides the basic functionality the FaaS Gateway requires:

- List functions
- Deploy function
- Delete function
- Invoke function synchronously

Any other metrics or UI components will be maintained separately in the main OpenFaaS project.

Motivation for a separate micro-service:

- The Kubernetes go-client weighs in at 41MB, even when only a few lines of code use it
- After including the go-client, the code takes more than 2 minutes to compile

So rather than inflating the original project's source code, this micro-service will act as a co-operator or plug-in. Some additional changes will be needed in the main OpenFaaS project to switch between implementations.

There is no planned support for dual orchestrators - i.e. Swarm and K8s at the same time on the same host/network.

Get involved

Please star the FaaS and FaaS-netes GitHub repos.

Contributions are welcome - see the contributing guide for OpenFaaS.

The OpenFaaS complete walk-through on Kubernetes Video shows Prometheus and auto-scaling in action.

Explore OpenFaaS / FaaS-netes with minikube

These instructions may be out of sync with the latest changes. If you just want to deploy OpenFaaS on Kubernetes, follow the OpenFaaS and Kubernetes Deployment Guide.

Let's try it out:

- Create a single-node cluster on our Mac
- Deploy a function with the faas-cli
- Deploy OpenFaaS with helm
- Make calls to list the functions and invoke a function

I'll give instructions for creating your cluster on a Mac with minikube, but you can also use kubeadm on Linux in the cloud by following this tutorial.

Begin the official tutorial on Medium

Appendix

Auto-scale your functions

Given enough load (more than 5 requests/second), OpenFaaS will auto-scale your service. You can test this by opening the Prometheus web page and then generating load with Apache Bench or a while/true/curl bash loop.

Here's an example you can use to generate load:

ip=$(minikube ip); while true; do curl "$ip:31112/function/nodeinfo" -d ""; done

Prometheus is exposed on a NodePort of 31119 which shows the function invocation rate.

$ open http://$(minikube ip):31119/

Here's an example for use with the Prometheus UI:

rate(gateway_function_invocation_total[20s])

This shows the per-second rate at which each function has been invoked, averaged over a 20-second window.

The OpenFaaS complete walk-through on Kubernetes Video shows auto-scaling in action and how to use the Prometheus UI.

Test out the UI

You can also access the OpenFaaS UI through the node's IP address and the NodePort we exposed earlier.

$ open http://$(minikube ip):31112/

If you've used the Kubernetes dashboard, this UI is a similar concept: you can list, invoke and create new functions.
