Documentation

Overview ¶

Copyright 2019 The Swarm Authors. This file is part of the Swarm library.

The Swarm library is free software: you can redistribute it and/or modify it under the terms of the GNU Lesser General Public License as published by the Free Software Foundation, either version 3 of the License, or (at your option) any later version.

The Swarm library is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU Lesser General Public License for more details.

You should have received a copy of the GNU Lesser General Public License along with the Swarm library. If not, see <http://www.gnu.org/licenses/>.

Index ¶

- Constants

- Variables

- func Label(e *entry) string

- func LogAddrs(nns [][]byte) string

- func NewCapabilityIndex(c capability.Capability) *capabilityIndex

- func NewDefaultIndex() *capabilityIndex

- func NewEnode(params *EnodeParams) (*enode.Node, error)

- func NewEnodeRecord(params *EnodeParams) (*enr.Record, error)

- func NewPeerPotMap(neighbourhoodSize int, addrs [][]byte) map[string]*PeerPot

- func PrivateKeyToBzzKey(prvKey *ecdsa.PrivateKey) []byte

- type Bzz

- func (b *Bzz) APIs() []rpc.API

- func (b *Bzz) GetOrCreateHandshake(peerID enode.ID) (*HandshakeMsg, bool)

- func (b *Bzz) NodeInfo() interface{}

- func (b *Bzz) Protocols() []p2p.Protocol

- func (b *Bzz) RunProtocol(spec *protocols.Spec, run func(*BzzPeer) error) func(*p2p.Peer, p2p.MsgReadWriter) error

- func (b *Bzz) Stop() error

- func (b *Bzz) UpdateLocalAddr(byteaddr []byte) *BzzAddr

- type BzzAddr

- func (a *BzzAddr) Address() []byte

- func (b *BzzAddr) DecodeRLP(s *rlp.Stream) error

- func (b *BzzAddr) EncodeRLP(w io.Writer) error

- func (a *BzzAddr) ID() enode.ID

- func (a *BzzAddr) Over() []byte

- func (a *BzzAddr) ShortOver() string

- func (a *BzzAddr) ShortString() string

- func (a *BzzAddr) ShortUnder() string

- func (a *BzzAddr) String() string

- func (a *BzzAddr) Under() []byte

- func (a *BzzAddr) Update(na *BzzAddr) *BzzAddr

- func (b *BzzAddr) WithCapabilities(c *capability.Capabilities) *BzzAddr

- type BzzConfig

- type BzzPeer

- type ENRAddrEntry

- type ENRBootNodeEntry

- type EnodeParams

- type HandshakeMsg

- type Health

- type Hive

- func (h *Hive) NodeInfo() interface{}

- func (h *Hive) NotifyDepth(depth uint8)

- func (h *Hive) NotifyPeer(p *BzzAddr)

- func (h *Hive) Peer(id enode.ID) *BzzPeer

- func (h *Hive) PeerInfo(id enode.ID) interface{}

- func (h *Hive) Run(p *BzzPeer) error

- func (h *Hive) Start(server *p2p.Server) error

- func (h *Hive) Stop() error

- type HiveParams

- type KadParams

- type Kademlia

- func (k *Kademlia) BaseAddr() []byte

- func (k *Kademlia) EachAddr(base []byte, o int, f func(*BzzAddr, int) bool)

- func (k *Kademlia) EachAddrFiltered(base []byte, capKey string, o int, f func(*BzzAddr, int) bool) error

- func (k *Kademlia) EachBinDesc(base []byte, minProximityOrder int, consumer PeerBinConsumer)

- func (k *Kademlia) EachBinDescFiltered(base []byte, capKey string, minProximityOrder int, consumer PeerBinConsumer) error

- func (k *Kademlia) EachConn(base []byte, o int, f func(*Peer, int) bool)

- func (k *Kademlia) EachConnFiltered(base []byte, capKey string, o int, f func(*Peer, int) bool) error

- func (k *Kademlia) GetHealthInfo(pp *PeerPot) *Health

- func (k *Kademlia) IsClosestTo(addr []byte, filter func(*BzzPeer) bool) (closest bool)

- func (k *Kademlia) IsWithinDepth(addr []byte) bool

- func (k *Kademlia) KademliaInfo() KademliaInfo

- func (k *Kademlia) NeighbourhoodDepth() int

- func (k *Kademlia) NeighbourhoodDepthCapability(s string) (int, error)

- func (k *Kademlia) Off(p *Peer)

- func (k *Kademlia) On(p *Peer) (uint8, bool)

- func (k *Kademlia) Register(peers ...*BzzAddr) error

- func (k *Kademlia) RegisterCapabilityIndex(s string, c capability.Capability) error

- func (k *Kademlia) Saturation() int

- func (k *Kademlia) String() string

- func (k *Kademlia) SubscribeToNeighbourhoodDepthChange() (c <-chan struct{}, unsubscribe func())

- func (k *Kademlia) SubscribeToPeerChanges() *pubsubchannel.Subscription

- func (k *Kademlia) SuggestPeer() (suggestedPeer *BzzAddr, saturationDepth int, changed bool)

- type KademliaBackend

- type KademliaInfo

- type KademliaLoadBalancer

- func (klb *KademliaLoadBalancer) EachBinDesc(base []byte, consumeBin LBBinConsumer)

- func (klb *KademliaLoadBalancer) EachBinFiltered(base []byte, capKey string, consumeBin LBBinConsumer) error

- func (klb *KademliaLoadBalancer) EachBinNodeAddress(consumeBin LBBinConsumer)

- func (klb *KademliaLoadBalancer) Stop()

- type LBBin

- type LBBinConsumer

- type LBPeer

- type Peer

- type PeerBin

- type PeerBinConsumer

- type PeerConsumer

- type PeerIterator

- type PeerPot

Constants ¶

```go
const (
	DefaultNetworkID = 4
)
```

Variables ¶

```go
var BzzSpec = &protocols.Spec{
	Name:       "bzz",
	Version:    14,
	MaxMsgSize: 10 * 1024 * 1024,
	Messages: []interface{}{
		HandshakeMsg{},
	},
}
```

BzzSpec is the spec of the generic swarm handshake

```go
var (
	CapabilityID = capability.CapabilityID(0)
)
```

```go
var DefaultTestNetworkID = rand.Uint64()
```

```go
var DiscoverySpec = &protocols.Spec{
	Name:       "hive",
	Version:    11,
	MaxMsgSize: 10 * 1024 * 1024,
	Messages: []interface{}{
		peersMsg{},
		subPeersMsg{},
	},
}
```

DiscoverySpec is the spec for the bzz discovery subprotocols

var Pof = pot.DefaultPof(256)
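Pof is the proximity order function used to place addresses into Kademlia bins. The argument 256 appears to be the maximum proximity order (the bit length of a 32-byte address); treat that reading as an assumption. As a rough illustration, the sketch below computes a proximity order as the number of leading bits two addresses share, capped at a maximum. The helper `proximityOrder` is hypothetical and is not `pot.DefaultPof` itself, whose exact signature and return values are not shown on this page.

```go
package main

import (
	"fmt"
	"math/bits"
)

// proximityOrder is a hypothetical helper, not pot.DefaultPof: it returns the
// number of leading bits that a and b share, capped at maxPO.
func proximityOrder(a, b []byte, maxPO int) int {
	n := len(a)
	if len(b) < n {
		n = len(b)
	}
	po := n * 8 // all compared bits match unless a difference is found
	for i := 0; i < n; i++ {
		if x := a[i] ^ b[i]; x != 0 {
			// Bits before the first differing bit are shared.
			po = i*8 + bits.LeadingZeros8(x)
			break
		}
	}
	if po > maxPO {
		return maxPO
	}
	return po
}

func main() {
	base := []byte{0b1010_0000, 0x00}
	fmt.Println(proximityOrder(base, []byte{0b0010_0000, 0x00}, 256)) // 0
	fmt.Println(proximityOrder(base, []byte{0b1011_0000, 0x00}, 256)) // 3
	fmt.Println(proximityOrder(base, base, 256))                      // 16
}
```

The higher the proximity order, the closer two addresses are in the XOR metric, which is why bins are traversed in descending order when looking for the closest peers.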

Functions ¶

func NewCapabilityIndex ¶ added in v0.5.0

func NewCapabilityIndex(c capability.Capability) *capabilityIndex

NewCapabilityIndex creates a new capability index with a copy of the provided capabilities array

func NewDefaultIndex ¶ added in v0.5.3

func NewDefaultIndex() *capabilityIndex

NewDefaultIndex creates a new index for no capability

func NewEnode ¶

func NewEnode(params *EnodeParams) (*enode.Node, error)

NewEnode creates a new enode object for the given parameters

func NewEnodeRecord ¶

func NewEnodeRecord(params *EnodeParams) (*enr.Record, error)

NewEnodeRecord creates a new valid swarm node ENR record from the given parameters

func NewPeerPotMap ¶

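func NewPeerPotMap(neighbourhoodSize int, addrs [][]byte) map[string]*PeerPot

NewPeerPotMap creates a map of PeerPot records for the given addresses, keyed by the hexadecimal representation of each address. The neighbourhoodSize argument is used as the NeighbourhoodSize. It is used for testing only. TODO: move to a separate testing tools file.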

func PrivateKeyToBzzKey ¶

func PrivateKeyToBzzKey(prvKey *ecdsa.PrivateKey) []byte

PrivateKeyToBzzKey creates a swarm overlay address from the given private key

Types ¶

type Bzz ¶

Bzz is the swarm protocol bundle

func NewBzz ¶

func NewBzz(config *BzzConfig, kad *Kademlia, store state.Store, streamerSpec, retrievalSpec *protocols.Spec, streamerRun, retrievalRun func(*BzzPeer) error) *Bzz

NewBzz is the swarm protocol constructor. Arguments:

- bzz config

- overlay driver

- peer store

func (*Bzz) APIs ¶

func (b *Bzz) APIs() []rpc.API

APIs returns the APIs offered by bzz:

- hive

Bzz implements the node.Service interface.

func (*Bzz) GetOrCreateHandshake ¶

func (b *Bzz) GetOrCreateHandshake(peerID enode.ID) (*HandshakeMsg, bool)

GetOrCreateHandshake returns the bzz handshake that the remote peer with peerID sent

func (*Bzz) NodeInfo ¶

func (b *Bzz) NodeInfo() interface{}

NodeInfo returns the node's overlay address

func (*Bzz) Protocols ¶

func (b *Bzz) Protocols() []p2p.Protocol

Protocols returns the protocols swarm offers:

- handshake/hive

- discovery

Bzz implements the node.Service interface.

func (*Bzz) RunProtocol ¶

func (b *Bzz) RunProtocol(spec *protocols.Spec, run func(*BzzPeer) error) func(*p2p.Peer, p2p.MsgReadWriter) error

RunProtocol is a wrapper for swarm subprotocols. It returns a p2p protocol run function that can be assigned to the p2p.Protocol#Run field. Arguments:

- p2p protocol spec

- run function taking a BzzPeer as argument. This run function is meant to block for the duration of the protocol session; on return, the session is terminated and the peer is disconnected.

The protocol waits until the bzz handshake is negotiated. The overlay address on the BzzPeer is set from the remote handshake.

func (*Bzz) UpdateLocalAddr ¶

func (b *Bzz) UpdateLocalAddr(byteaddr []byte) *BzzAddr

UpdateLocalAddr updates the underlay address of the running node

type BzzAddr ¶

```go
type BzzAddr struct {
	OAddr        []byte
	UAddr        []byte
	Capabilities *capability.Capabilities
}
```

BzzAddr implements the PeerAddr interface

func NewBzzAddr ¶ added in v0.5.0

NewBzzAddr creates a new BzzAddr with the specified byte values for the overlay and underlay addresses. It will contain an empty capabilities object.

func NewBzzAddrFromEnode ¶ added in v0.5.0

NewBzzAddrFromEnode creates a BzzAddr where the overlay address is the byte representation of the enode ID. It is only used for test purposes. TODO: This method should be replaced by (optionally deterministic) generation of addresses using NewEnode and PrivateKeyToBzzKey.

func RandomBzzAddr ¶ added in v0.5.0

func RandomBzzAddr() *BzzAddr

RandomBzzAddr is a utility function that generates a private key and the corresponding enode ID. It then calls NewBzzAddrFromEnode to generate a corresponding overlay address from the enode.

func (*BzzAddr) ShortOver ¶ added in v0.5.5

func (a *BzzAddr) ShortOver() string

ShortOver returns a shortened version of the overlay address. It can be used for logging.

func (*BzzAddr) ShortString ¶ added in v0.5.5

func (a *BzzAddr) ShortString() string

ShortString returns shortened versions of the overlay and underlay addresses in the format shortOver:shortUnder. It can be used for logging.

func (*BzzAddr) ShortUnder ¶ added in v0.5.5

func (a *BzzAddr) ShortUnder() string

ShortUnder returns a shortened version of the underlay address. It can be used for logging.

func (*BzzAddr) WithCapabilities ¶ added in v0.5.0

func (b *BzzAddr) WithCapabilities(c *capability.Capabilities) *BzzAddr

WithCapabilities is a chained constructor method to set the capabilities array for a BzzAddr

type BzzConfig ¶

```go
type BzzConfig struct {
	Address      *BzzAddr
	HiveParams   *HiveParams
	NetworkID    uint64
	LightNode    bool // temporarily kept as we still only define light/full on operational level
	BootnodeMode bool
	SyncEnabled  bool
}
```

BzzConfig captures the config params used by the hive

type BzzPeer ¶

```go
type BzzPeer struct {
	*protocols.Peer // represents the connection for online peers
	*BzzAddr        // remote address -> implements Addr interface = protocols.Peer
	// contains filtered or unexported fields
}
```

BzzPeer is the bzz protocol view of a protocols.Peer (itself an extension of p2p.Peer). It implements the Peer interface and all interfaces Peer implements: Addr, OverlayPeer.

func NewBzzPeer ¶

type ENRAddrEntry ¶

```go
type ENRAddrEntry struct {
	// contains filtered or unexported fields
}
```

ENRAddrEntry is the entry type to store the bzz key in the enode

func NewENRAddrEntry ¶

func NewENRAddrEntry(addr []byte) *ENRAddrEntry

func (ENRAddrEntry) Address ¶

func (b ENRAddrEntry) Address() []byte

type ENRBootNodeEntry ¶

type ENRBootNodeEntry bool

func (ENRBootNodeEntry) ENRKey ¶

func (b ENRBootNodeEntry) ENRKey() string

type EnodeParams ¶

```go
type EnodeParams struct {
	PrivateKey *ecdsa.PrivateKey
	EnodeKey   *ecdsa.PrivateKey
	Lightnode  bool
	Bootnode   bool
}
```

EnodeParams contains the parameters used to create new Enode Records

type HandshakeMsg ¶

```go
type HandshakeMsg struct {
	Version   uint64
	NetworkID uint64
	Addr      *BzzAddr
	// contains filtered or unexported fields
}
```

Handshake fields:

- Version: 8-byte integer version of the protocol

- NetworkID: 8-byte integer network identifier

- Addr: the address advertised by the node, including underlay and overlay connections

- Capabilities: the capabilities bitvector

func (*HandshakeMsg) String ¶

func (bh *HandshakeMsg) String() string

String pretty prints the handshake

type Health ¶

```go
type Health struct {
	KnowNN           bool     // whether node knows all its neighbours
	CountKnowNN      int      // number of neighbours known
	MissingKnowNN    [][]byte // which neighbours we should have known but we don't
	ConnectNN        bool     // whether node is connected to all its neighbours
	CountConnectNN   int      // number of neighbours connected to
	MissingConnectNN [][]byte // which neighbours we should have been connected to but we're not
	// Saturated: if in all bins < depth number of connections >= MinBinSize or,
	// if number of connections < MinBinSize, to the number of available peers in that bin
	Saturated bool
	Hive      string
}
```

Health is the health state of the Kademlia. It is used for testing only.

type Hive ¶

```go
type Hive struct {
	*HiveParams             // settings
	*Kademlia               // the overlay connectivity driver
	Store       state.Store // storage interface to save peers across sessions
	// contains filtered or unexported fields
}
```

Hive manages network connections of the swarm node

func NewHive ¶

func NewHive(params *HiveParams, kad *Kademlia, store state.Store) *Hive

NewHive constructs a new hive. Arguments:

- HiveParams: config parameters

- Kademlia: connectivity driver using a network topology

- StateStore: store used to save peers across sessions

func (*Hive) NodeInfo ¶

func (h *Hive) NodeInfo() interface{}

NodeInfo function is used by the p2p.server RPC interface to display protocol specific node information

func (*Hive) NotifyDepth ¶ added in v0.5.3

func (h *Hive) NotifyDepth(depth uint8)

NotifyDepth sends a message to all connections if the depth of saturation has changed.

func (*Hive) NotifyPeer ¶ added in v0.5.3

func (h *Hive) NotifyPeer(p *BzzAddr)

NotifyPeer informs all peers about a newly added node.

func (*Hive) Peer ¶

func (h *Hive) Peer(id enode.ID) *BzzPeer

Peer returns a bzz peer from the Hive. If there is no peer with the provided enode ID, a nil value is returned.

func (*Hive) PeerInfo ¶

func (h *Hive) PeerInfo(id enode.ID) interface{}

PeerInfo is used by the p2p.server RPC interface to display protocol-specific information about any connected peer, referred to by its NodeID.

type HiveParams ¶

```go
type HiveParams struct {
	Discovery             bool  // whether discovery is wanted or not
	DisableAutoConnect    bool  // this flag disables the auto connect loop
	PeersBroadcastSetSize uint8 // how many peers to use when relaying
	MaxPeersPerRequest    uint8 // max size for peer address batches
	KeepAliveInterval     time.Duration
}
```

HiveParams holds the config options for the hive

func NewHiveParams ¶

func NewHiveParams() *HiveParams

NewHiveParams returns hive config with only the

type KadParams ¶

```go
type KadParams struct {
	// adjustable parameters
	MaxProxDisplay    int   // number of rows the table shows
	NeighbourhoodSize int   // nearest neighbour core minimum cardinality
	MinBinSize        int   // minimum number of peers in a row
	MaxBinSize        int   // maximum number of peers in a row before pruning
	RetryInterval     int64 // initial interval before a peer is first redialed
	RetryExponent     int   // exponent to multiply retry intervals with
	MaxRetries        int   // maximum number of redial attempts
	// function to sanction or prevent suggesting a peer
	Reachable    func(*BzzAddr) bool      `json:"-"`
	Capabilities *capability.Capabilities `json:"-"`
}
```

KadParams holds the config params for Kademlia
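RetryInterval, RetryExponent and MaxRetries together describe how redialing backs off. One plausible reading, sketched below, is geometric backoff: the first redial waits RetryInterval and each later interval is multiplied by RetryExponent, for at most MaxRetries attempts. This formula is an assumption made for illustration; the page does not spell out how Kademlia combines these parameters. The `retrySchedule` helper and the example values are hypothetical, and intervals are left in the same unspecified int64 units as RetryInterval.

```go
package main

import "fmt"

// retrySchedule is a hypothetical helper returning the wait before each
// redial attempt, assuming geometric backoff:
// interval_n = retryInterval * retryExponent^n, for n = 0..maxRetries-1.
func retrySchedule(retryInterval int64, retryExponent, maxRetries int) []int64 {
	if maxRetries < 0 {
		maxRetries = 0
	}
	schedule := make([]int64, 0, maxRetries)
	interval := retryInterval
	for n := 0; n < maxRetries; n++ {
		schedule = append(schedule, interval)
		interval *= int64(retryExponent)
	}
	return schedule
}

func main() {
	// Illustrative values only, not the defaults returned by NewKadParams.
	fmt.Println(retrySchedule(100, 2, 5)) // [100 200 400 800 1600]
}
```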

func NewKadParams ¶

func NewKadParams() *KadParams

NewKadParams returns a params struct with default values

type Kademlia ¶

```go
type Kademlia struct {
	*KadParams // Kademlia configuration parameters
	// contains filtered or unexported fields
}
```

Kademlia is a table of live peers and a db of known peers (node records)

func NewKademlia ¶

NewKademlia creates a Kademlia table for base address addr with parameters as in params. If params is nil, it uses default values.

func (*Kademlia) EachAddr ¶

func (k *Kademlia) EachAddr(base []byte, o int, f func(*BzzAddr, int) bool)

EachAddr, called with (base, o, f), is an iterator that applies f to each known peer whose proximity order, as measured from base, is o or less. If base is nil, the Kademlia base address is used.

func (*Kademlia) EachAddrFiltered ¶ added in v0.5.0

func (k *Kademlia) EachAddrFiltered(base []byte, capKey string, o int, f func(*BzzAddr, int) bool) error

EachAddrFiltered performs the same action as EachAddr, except that it only returns peers that match the specified capability index filter.

func (*Kademlia) EachBinDesc ¶ added in v0.5.3

func (k *Kademlia) EachBinDesc(base []byte, minProximityOrder int, consumer PeerBinConsumer)

EachBinDesc traverses bins (PeerBin) in descending order of proximity (closest first) with respect to a given base address. It stops iterating when the supplied consumer returns false, the bins run out, or a bin is found with a proximity order less than the minProximityOrder parameter.
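The traversal rule for EachBinDesc (closest bin first; stop on a false return, when bins run out, or at a bin below minProximityOrder) can be modelled over a plain slice. This sketch uses simplified, hypothetical `bin` and `binConsumer` types in place of PeerBin and PeerBinConsumer, and assumes the bins are already grouped by proximity order relative to the base address.

```go
package main

import (
	"fmt"
	"sort"
)

// bin is a simplified, hypothetical stand-in for PeerBin.
type bin struct {
	ProximityOrder int
	Peers          []string
}

// binConsumer mirrors PeerBinConsumer: return false to stop iterating.
type binConsumer func(b bin) bool

// eachBinDesc visits bins from the highest proximity order (closest) down,
// stopping when the consumer returns false or a bin falls below minPO.
func eachBinDesc(bins []bin, minPO int, consume binConsumer) {
	sorted := append([]bin(nil), bins...) // leave the caller's slice untouched
	sort.Slice(sorted, func(i, j int) bool {
		return sorted[i].ProximityOrder > sorted[j].ProximityOrder
	})
	for _, b := range sorted {
		if b.ProximityOrder < minPO || !consume(b) {
			return
		}
	}
}

func main() {
	bins := []bin{
		{ProximityOrder: 0, Peers: []string{"a", "b"}},
		{ProximityOrder: 3, Peers: []string{"c"}},
		{ProximityOrder: 1, Peers: []string{"d"}},
	}
	eachBinDesc(bins, 1, func(b bin) bool {
		fmt.Println(b.ProximityOrder, b.Peers)
		return true
	})
	// prints "3 [c]" then "1 [d]"; the PO 0 bin is below minPO and is skipped
}
```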

func (*Kademlia) EachBinDescFiltered ¶ added in v0.5.3

func (k *Kademlia) EachBinDescFiltered(base []byte, capKey string, minProximityOrder int, consumer PeerBinConsumer) error

EachBinDescFiltered traverses bins in descending order, filtered by capabilities. It is the same as EachBinDesc but takes into account only peers in the capability index identified by capKey.

func (*Kademlia) EachConn ¶

func (k *Kademlia) EachConn(base []byte, o int, f func(*Peer, int) bool)

EachConn, called with (base, o, f), is an iterator that applies f to each live peer whose proximity order, as measured from base, is o or less. If base is nil, the Kademlia base address is used.

func (*Kademlia) EachConnFiltered ¶ added in v0.5.0

func (k *Kademlia) EachConnFiltered(base []byte, capKey string, o int, f func(*Peer, int) bool) error

EachConnFiltered performs the same action as EachConn, except that it only returns peers that match the specified capability index filter.

func (*Kademlia) GetHealthInfo ¶

func (k *Kademlia) GetHealthInfo(pp *PeerPot) *Health

GetHealthInfo reports the health state of the Kademlia connectivity.

The PeerPot argument provides an all-knowing view of the network. The resulting Health object is the result of comparing the actual composition of the Kademlia in question (the receiver) with what it SHOULD have been, taking everything we know about the network into consideration.

It is used for testing only.

func (*Kademlia) IsClosestTo ¶ added in v0.5.0

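func (k *Kademlia) IsClosestTo(addr []byte, filter func(*BzzPeer) bool) (closest bool)

IsClosestTo returns true if self is the closest peer to addr among the filtered peers, i.e. it returns false if and only if there is a peer for which both of the following hold:

- filter(bzzpeer) == true

- pot.DistanceCmp(addr, peeraddress, selfaddress) == 1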
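The IsClosestTo check rests on comparing XOR distances. The sketch below reimplements a comparison with the semantics the description relies on (1 when the first candidate is closer to the target than the second, -1 when it is farther, 0 when equidistant) and uses it to decide whether self is closest among the peers that pass a filter. `distanceCmp` and `isClosestTo` are hypothetical stand-ins for pot.DistanceCmp and Kademlia.IsClosestTo; they work on raw byte slices and assume all addresses have the same length.

```go
package main

import "fmt"

// distanceCmp compares the XOR distances of x and y to a (equal lengths assumed):
// 1 if x is closer to a, -1 if y is closer, 0 if equidistant.
func distanceCmp(a, x, y []byte) int {
	for i := range a {
		dx, dy := a[i]^x[i], a[i]^y[i]
		if dx < dy {
			return 1
		}
		if dx > dy {
			return -1
		}
	}
	return 0
}

// isClosestTo reports false iff some peer passing filter is strictly closer to addr than self.
func isClosestTo(self, addr []byte, peers [][]byte, filter func([]byte) bool) bool {
	for _, p := range peers {
		if filter(p) && distanceCmp(addr, p, self) == 1 {
			return false
		}
	}
	return true
}

func main() {
	self := []byte{0x10}
	peers := [][]byte{{0x80}, {0x13}}
	all := func([]byte) bool { return true }
	fmt.Println(isClosestTo(self, []byte{0x11}, peers, all)) // true
	fmt.Println(isClosestTo(self, []byte{0x12}, peers, all)) // false: 0x13 is closer
}
```

Comparing the XOR bytes from the most significant byte down is equivalent to comparing the XOR distances as big-endian integers, so no big-number arithmetic is needed.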

func (*Kademlia) IsWithinDepth ¶ added in v0.5.3

func (k *Kademlia) IsWithinDepth(addr []byte) bool

IsWithinDepth checks whether a given address falls within this node's saturation depth

func (*Kademlia) KademliaInfo ¶ added in v0.4.3

func (k *Kademlia) KademliaInfo() KademliaInfo

func (*Kademlia) NeighbourhoodDepth ¶

func (k *Kademlia) NeighbourhoodDepth() int

NeighbourhoodDepth returns the value calculated by the depthForPot function in the setNeighbourhoodDepth method.

func (*Kademlia) NeighbourhoodDepthCapability ¶ added in v0.5.0

func (k *Kademlia) NeighbourhoodDepthCapability(s string) (int, error)

func (*Kademlia) Register ¶

func (k *Kademlia) Register(peers ...*BzzAddr) error

Register enters each address as a Kademlia peer record into the database of known peer addresses.

func (*Kademlia) RegisterCapabilityIndex ¶ added in v0.5.0

func (k *Kademlia) RegisterCapabilityIndex(s string, c capability.Capability) error

RegisterCapabilityIndex adds an entry to the capability index of the Kademlia. The capability index is associated with the supplied string s. Any peers matching any bits set in the indexed capability will be added to the index (and removed from it when they are removed).
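The matching rule for a capability index, "any bits set in the capability", amounts to a bitwise AND test. Assuming capabilities are represented as a bitvector (as the HandshakeMsg description suggests), the sketch below shows how peers could be admitted to and dropped from a named index. The `capIndex` type, its methods and the uint64 representation are hypothetical simplifications, not the capability package's API.

```go
package main

import "fmt"

// capIndex is a hypothetical, simplified capability index: peers whose
// capability bits overlap the index bits are tracked by name.
type capIndex struct {
	bits  uint64
	peers map[string]bool
}

func newCapIndex(bits uint64) *capIndex {
	return &capIndex{bits: bits, peers: make(map[string]bool)}
}

// add admits the peer if it shares at least one set bit with the index.
func (ci *capIndex) add(peer string, peerBits uint64) bool {
	if ci.bits&peerBits == 0 {
		return false
	}
	ci.peers[peer] = true
	return true
}

// remove drops the peer from the index, e.g. when it leaves the table.
func (ci *capIndex) remove(peer string) {
	delete(ci.peers, peer)
}

func main() {
	indexes := map[string]*capIndex{"full": newCapIndex(0b0011)}
	fmt.Println(indexes["full"].add("peerA", 0b0010)) // true: bit 1 overlaps
	fmt.Println(indexes["full"].add("peerB", 0b0100)) // false: no overlap
	indexes["full"].remove("peerA")
	fmt.Println(len(indexes["full"].peers)) // 0
}
```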

func (*Kademlia) Saturation ¶

func (k *Kademlia) Saturation() int

Saturation returns the smallest PO value at which the node has fewer than MinBinSize peers. If the iterator reaches the neighbourhood radius, the last bin + 1 is returned.

func (*Kademlia) SubscribeToNeighbourhoodDepthChange ¶

func (k *Kademlia) SubscribeToNeighbourhoodDepthChange() (c <-chan struct{}, unsubscribe func())

SubscribeToNeighbourhoodDepthChange returns the channel that signals when the neighbourhood depth value changes. The current neighbourhood depth is returned by the NeighbourhoodDepth method. The returned function unsubscribes the channel from signaling and releases the resources. It is safe to call the returned function multiple times.
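The subscription contract of SubscribeToNeighbourhoodDepthChange (a signal-only channel plus an unsubscribe function that may be called repeatedly) is a common Go pattern. The sketch below shows one way to implement it with a one-slot buffered channel, which coalesces bursts of changes, and sync.Once for an idempotent unsubscribe. The `depthNotifier` type and its methods are hypothetical and are not the Kademlia internals.

```go
package main

import (
	"fmt"
	"sync"
)

// depthNotifier is a hypothetical broadcaster of "depth changed" signals.
type depthNotifier struct {
	mu   sync.Mutex
	subs map[chan struct{}]struct{}
}

func newDepthNotifier() *depthNotifier {
	return &depthNotifier{subs: make(map[chan struct{}]struct{})}
}

// subscribe returns a signal channel and an unsubscribe func that is safe to call repeatedly.
func (n *depthNotifier) subscribe() (<-chan struct{}, func()) {
	c := make(chan struct{}, 1) // one slot: pending signals are coalesced
	n.mu.Lock()
	n.subs[c] = struct{}{}
	n.mu.Unlock()
	var once sync.Once
	unsubscribe := func() {
		once.Do(func() {
			n.mu.Lock()
			delete(n.subs, c) // notify can no longer reach c after this
			n.mu.Unlock()
			close(c)
		})
	}
	return c, unsubscribe
}

// notify signals every subscriber without blocking.
func (n *depthNotifier) notify() {
	n.mu.Lock()
	defer n.mu.Unlock()
	for c := range n.subs {
		select {
		case c <- struct{}{}:
		default: // a signal is already pending
		}
	}
}

func main() {
	n := newDepthNotifier()
	c, unsubscribe := n.subscribe()
	n.notify()
	n.notify() // coalesced with the pending signal
	<-c
	fmt.Println(len(c)) // 0
	unsubscribe()
	unsubscribe() // second call is a no-op
	_, open := <-c
	fmt.Println(open) // false
}
```

Because the channel only signals that something changed, a subscriber reads the current value separately (on this page, via NeighbourhoodDepth), so dropping intermediate signals loses no information.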

func (*Kademlia) SubscribeToPeerChanges ¶ added in v0.5.3

func (k *Kademlia) SubscribeToPeerChanges() *pubsubchannel.Subscription

SubscribeToPeerChanges returns a subscription that signals when a Peer is added to or removed from the table. Unsubscribing stops the signaling and releases the resources; it is safe to unsubscribe multiple times.

type KademliaBackend ¶ added in v0.5.3

```go
type KademliaBackend interface {
	SubscribeToPeerChanges() *pubsubchannel.Subscription
	BaseAddr() []byte
	EachBinDesc(base []byte, minProximityOrder int, consumer PeerBinConsumer)
	EachBinDescFiltered(base []byte, capKey string, minProximityOrder int, consumer PeerBinConsumer) error
	EachConn(base []byte, o int, f func(*Peer, int) bool)
}
```

KademliaBackend is the required interface of KademliaLoadBalancer.

type KademliaInfo ¶ added in v0.4.3

type KademliaLoadBalancer ¶ added in v0.5.3

```go
type KademliaLoadBalancer struct {
	// contains filtered or unexported fields
}
```

KademliaLoadBalancer tries to balance requests across the peers in Kademlia by returning peers sorted by least used first whenever several peers with the same PO to a particular address would be returned. The user of KademliaLoadBalancer should signal that a returned element (LBPeer) has been used by calling lbPeer.AddUseCount().
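The least-used ordering and the AddUseCount contract can be sketched with a use counter per peer: within a bin, peers are sorted by ascending use count, and the caller increments the count of the peer it actually uses. The `balancer` and `lbPeer` types and the `leastUsedFirst` method below are hypothetical simplifications of KademliaLoadBalancer and LBPeer; the balancer's subscription to peer changes (which Stop unsubscribes from) is omitted.

```go
package main

import (
	"fmt"
	"sort"
	"sync"
)

// balancer is a hypothetical stand-in for KademliaLoadBalancer's bookkeeping:
// it only tracks how often each peer (by key) has been used.
type balancer struct {
	mu     sync.Mutex
	counts map[string]int
}

// lbPeer is a hypothetical stand-in for LBPeer.
type lbPeer struct {
	Key string
	b   *balancer
}

// AddUseCount records that the caller actually used this peer.
func (p lbPeer) AddUseCount() {
	p.b.mu.Lock()
	defer p.b.mu.Unlock()
	p.b.counts[p.Key]++
}

// leastUsedFirst returns the peers of one bin sorted by ascending use count,
// breaking ties by key so the order is deterministic.
func (b *balancer) leastUsedFirst(bin []string) []lbPeer {
	b.mu.Lock()
	defer b.mu.Unlock()
	keys := append([]string(nil), bin...)
	sort.Slice(keys, func(i, j int) bool {
		ci, cj := b.counts[keys[i]], b.counts[keys[j]]
		if ci != cj {
			return ci < cj
		}
		return keys[i] < keys[j]
	})
	peers := make([]lbPeer, len(keys))
	for i, k := range keys {
		peers[i] = lbPeer{Key: k, b: b}
	}
	return peers
}

func main() {
	b := &balancer{counts: make(map[string]int)}
	bin := []string{"p1", "p2", "p3"}
	var order []string
	for i := 0; i < 4; i++ {
		chosen := b.leastUsedFirst(bin)[0] // take the least used peer
		chosen.AddUseCount()               // signal that it was actually used
		order = append(order, chosen.Key)
	}
	fmt.Println(order) // [p1 p2 p3 p1]
}
```

If a caller forgets to call AddUseCount, the same peer keeps sorting first, which is why the description asks users to signal every actual use.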

func NewKademliaLoadBalancer ¶ added in v0.5.3

func NewKademliaLoadBalancer(kademlia KademliaBackend, useNearestNeighbourInit bool) *KademliaLoadBalancer

NewKademliaLoadBalancer creates a new KademliaLoadBalancer from a KademliaBackend. If useNearestNeighbourInit is true, the use count of the nearest neighbour peer is used when a peer is initialized. Otherwise, the use count of the least used peer in the same bin as the new peer is used. It is not yet clear which strategy is better; a decision can be made once this load balancer is used in more use cases.

func (*KademliaLoadBalancer) EachBinDesc ¶ added in v0.5.3

func (klb *KademliaLoadBalancer) EachBinDesc(base []byte, consumeBin LBBinConsumer)

EachBinDesc returns all bins in descending order from the perspective of base address. All peers in that bin will be provided to the LBBinConsumer sorted by least used first.

func (*KademliaLoadBalancer) EachBinFiltered ¶ added in v0.5.3

func (klb *KademliaLoadBalancer) EachBinFiltered(base []byte, capKey string, consumeBin LBBinConsumer) error

EachBinFiltered returns all bins in descending order from the perspective of base address. Only peers with the provided capabilities capKey are considered. All peers in that bin will be provided to the LBBinConsumer sorted by least used first.

func (*KademliaLoadBalancer) EachBinNodeAddress ¶ added in v0.5.3

func (klb *KademliaLoadBalancer) EachBinNodeAddress(consumeBin LBBinConsumer)

EachBinNodeAddress calls EachBinDesc with the base address of kademlia (the node address)

func (*KademliaLoadBalancer) Stop ¶ added in v0.5.3

func (klb *KademliaLoadBalancer) Stop()

Stop unsubscribes from notifiers.

type LBBinConsumer ¶ added in v0.5.3

LBBinConsumer is provided with a list of LBPeers in load-balancing order (currently least used first). It should return true to continue iterating over LBBins, or false to stop.

type LBPeer ¶ added in v0.5.3

```go
type LBPeer struct {
	Peer *Peer
	// contains filtered or unexported fields
}
```

An LBPeer represents a peer with an AddUseCount() function to signal that the peer has been used, so that the use is accounted for in the load-balancing sort order.

func (*LBPeer) AddUseCount ¶ added in v0.5.3

func (lbPeer *LBPeer) AddUseCount()

AddUseCount records a use of this peer. It should be called whenever the peer is actually used.

type Peer ¶

```go
type Peer struct {
	*BzzPeer
	// contains filtered or unexported fields
}
```

Peer wraps BzzPeer and embeds the Kademlia overlay connectivity driver

func (*Peer) Key ¶ added in v0.5.3

Key returns a string representation of this peer to be used in maps.

func (*Peer) Label ¶ added in v0.5.3

Label returns a short string representation for debugging purposes

func (*Peer) NotifyDepth ¶

NotifyDepth sends a subPeers Msg to the receiver notifying them about a change in the depth of saturation

func (*Peer) NotifyPeer ¶

NotifyPeer notifies the remote node (recipient) about a peer if the peer's PO is within the recipient's advertised depth OR the peer is closer to the recipient than self, unless the recipient was already notified about that peer during the connection session.
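The Peer.NotifyPeer rule combines two conditions with a per-session deduplication check. The sketch below expresses it as a predicate over proximity orders. It assumes, hypothetically, that "within the recipient's advertised depth" means the peer's PO relative to the recipient is at least that depth, and it approximates "closer to the recipient than self" by comparing proximity orders. `shouldNotify` and its parameters are illustrative and are not the Hive implementation.

```go
package main

import "fmt"

// shouldNotify is a hypothetical predicate for the NotifyPeer rule.
//   peerPO: proximity order of the announced peer relative to the recipient
//   selfPO: proximity order of this node relative to the recipient
//   depth:  the depth the recipient advertised
//   sent:   peers already announced to this recipient during the session;
//           the caller is expected to record peerKey after notifying
func shouldNotify(peerKey string, peerPO, selfPO, depth int, sent map[string]bool) bool {
	if sent[peerKey] {
		return false // already notified during this connection session
	}
	return peerPO >= depth || peerPO > selfPO
}

func main() {
	sent := map[string]bool{"known": true}
	fmt.Println(shouldNotify("a", 5, 2, 4, sent))     // true: within depth
	fmt.Println(shouldNotify("b", 3, 2, 4, sent))     // true: closer than self
	fmt.Println(shouldNotify("c", 1, 2, 4, sent))     // false
	fmt.Println(shouldNotify("known", 6, 2, 4, sent)) // false: already sent
}
```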

type PeerBin ¶ added in v0.5.3

```go
type PeerBin struct {
	ProximityOrder int
	Size           int
	PeerIterator   PeerIterator
}
```

PeerBin represents a bin in the Kademlia table. It contains a PeerIterator to traverse the peer entries inside it.

type PeerBinConsumer ¶ added in v0.5.3

PeerBinConsumer consumes a peerBin. It should return true if it wishes to continue iterating bins.

type PeerConsumer ¶ added in v0.5.3

type PeerConsumer func(entry *entry) bool

PeerConsumer consumes a peer entry in a PeerIterator. The function should return true if it wishes to continue iterating.

type PeerIterator ¶ added in v0.5.3

type PeerIterator func(PeerConsumer) bool

PeerIterator receives a PeerConsumer and iterates over peer entries until an execution of the PeerConsumer returns false or the entries run out. It returns the value returned by the last PeerConsumer execution.
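PeerIterator and PeerConsumer form a push-style iterator: the iterator drives the loop, and the consumer's boolean result decides whether to continue. The self-contained sketch below builds such an iterator over a slice, with a hypothetical `entry` struct standing in for the package's unexported entry type and lowercase `peerConsumer`/`peerIterator` types mirroring the documented signatures. The result for an empty list is not specified on this page; the sketch returns true, which is an assumption.

```go
package main

import "fmt"

// entry is a hypothetical stand-in for the package's unexported entry type.
type entry struct{ addr string }

// peerConsumer and peerIterator mirror PeerConsumer and PeerIterator.
type peerConsumer func(e *entry) bool
type peerIterator func(peerConsumer) bool

// sliceIterator builds a peerIterator over a fixed list of entries. It stops
// as soon as the consumer returns false and returns the last consumer result
// (true when there are no entries, an assumption for the empty case).
func sliceIterator(entries []*entry) peerIterator {
	return func(consume peerConsumer) bool {
		last := true
		for _, e := range entries {
			if last = consume(e); !last {
				break
			}
		}
		return last
	}
}

func main() {
	it := sliceIterator([]*entry{{"a"}, {"b"}, {"c"}})
	var seen []string
	finished := it(func(e *entry) bool {
		seen = append(seen, e.addr)
		return e.addr != "b" // stop after "b"
	})
	fmt.Println(seen, finished) // [a b] false
}
```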

Directories

| Path | Synopsis |
|---|---|
|  | You can run this simulation using go run ./swarm/network/simulations/overlay.go |

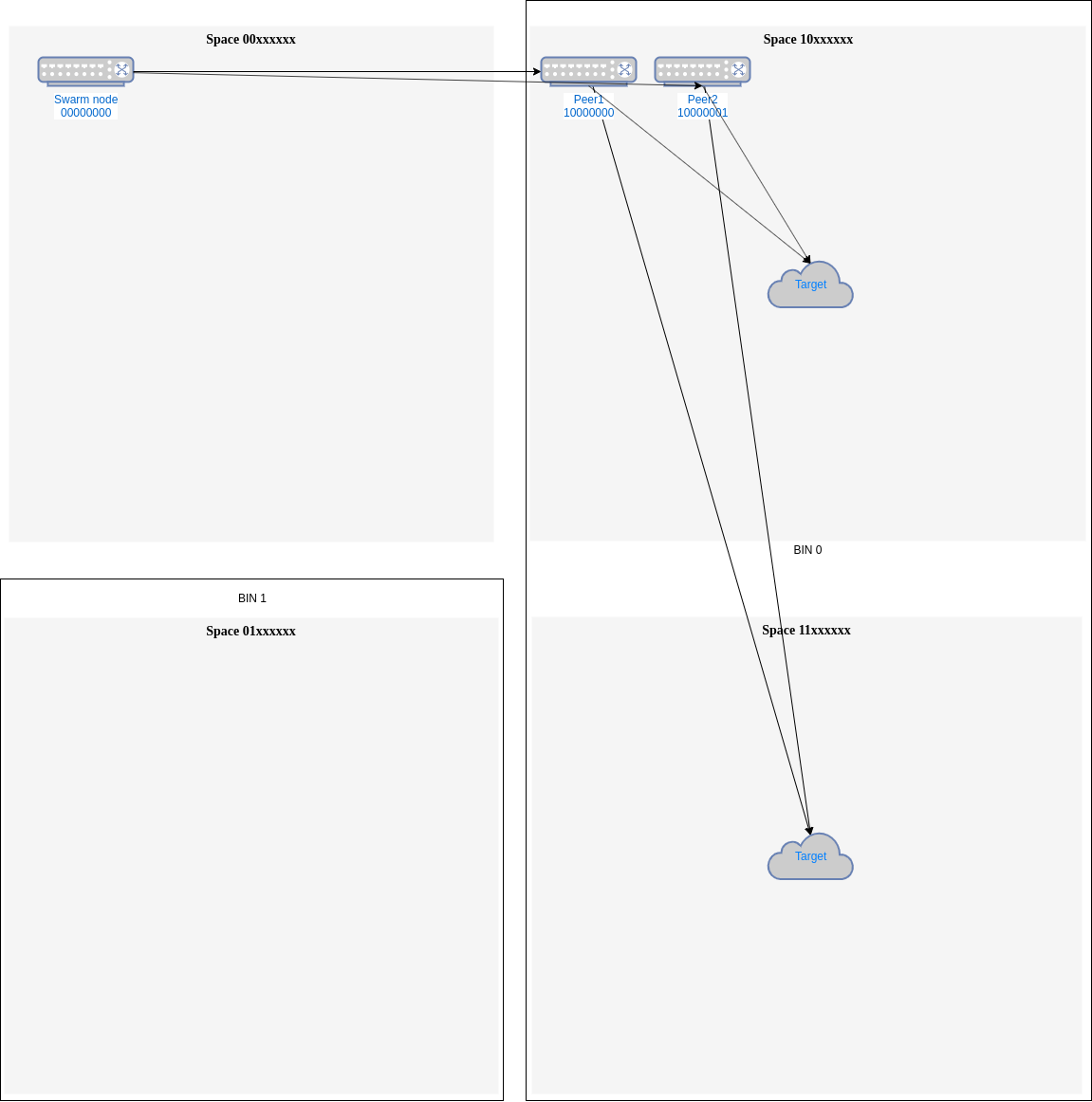

Fig.1 - Closer peers needs an external Load Balancing mechanism

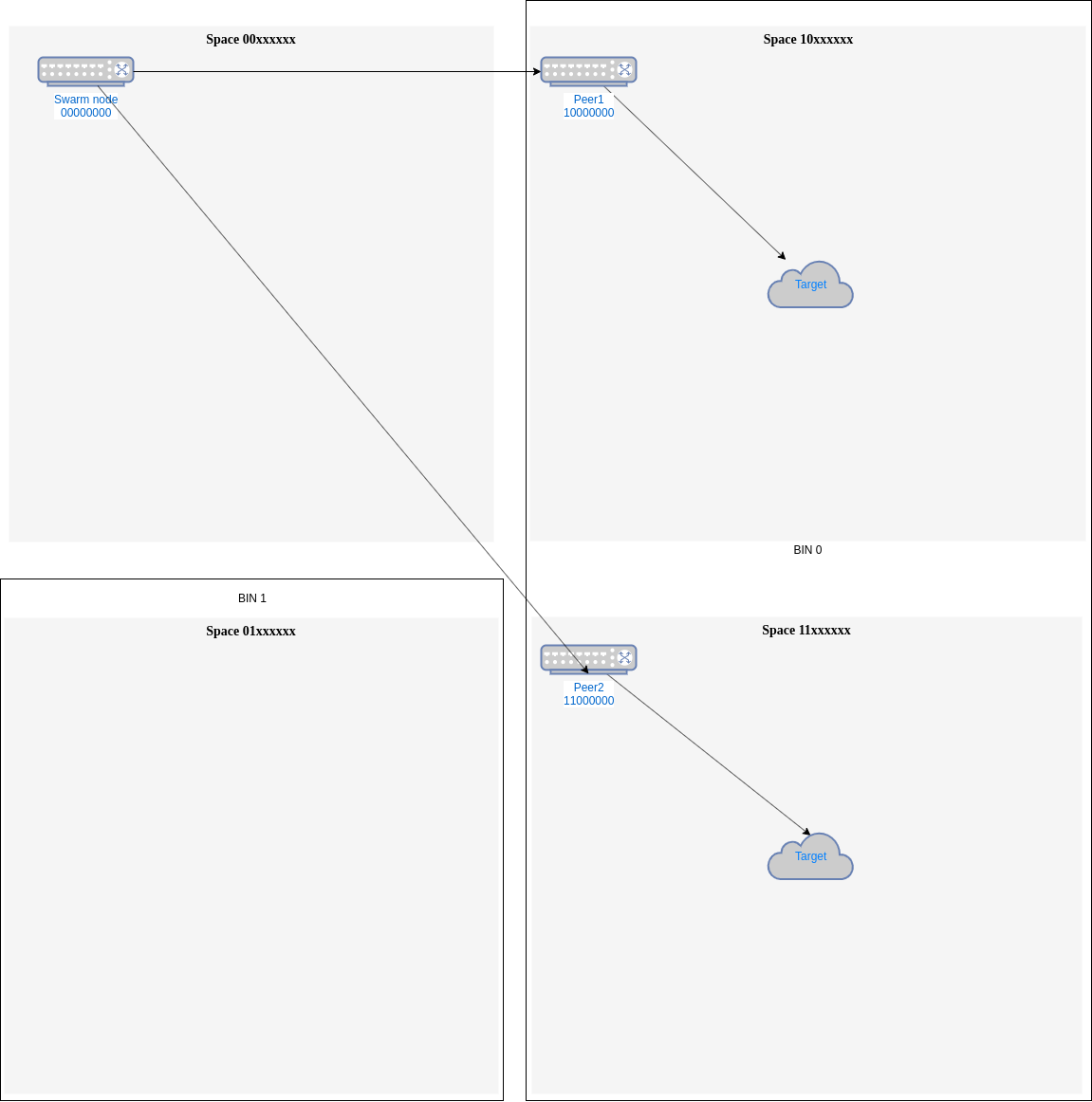

Fig.1 - Closer peers needs an external Load Balancing mechanism Fig.2 - Peers chosen by space address gap have a natural load balancing

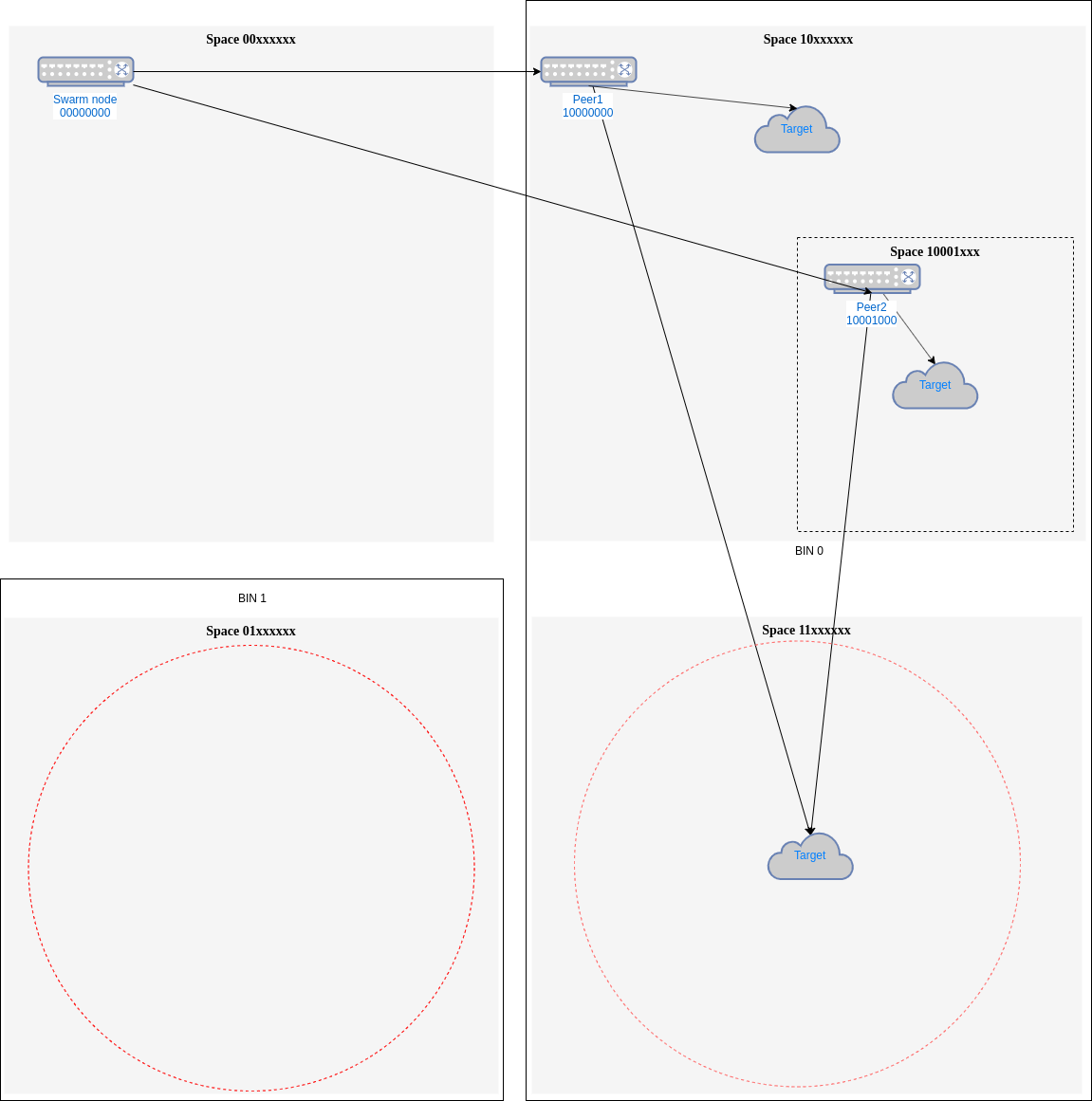

Fig.2 - Peers chosen by space address gap have a natural load balancing Fig. 3 - Comparing gaps temperature

Fig. 3 - Comparing gaps temperature