CB-Tumblebug (Multi-Cloud Infra Management) 👋

CB-TB? ✨

CB-Tumblebug (CB-TB for short) is a system for managing multi-cloud infrastructure consisting of resources from multiple cloud service providers. It is part of the Cloud-Barista project.

Note: Ongoing Development of CB-Tumblebug

CB-TB has not reached version 1.0 yet. We welcome any new suggestions, issues, opinions, and contributors!

Please note that the functionalities of Cloud-Barista are not yet stable or secure.

Be cautious if you plan to use the current release in a production environment.

If you encounter any difficulties using Cloud-Barista, please let us know by opening an issue or joining the Cloud-Barista Slack.

Note: Localization and Globalization of CB-Tumblebug

As an open-source project initiated by Korean members, we aim to encourage participation from Korean contributors during the initial stages of this project. Therefore, the CB-TB repository will accept the use of the Korean language in its early stages.

However, we hope this project will thrive regardless of contributors' countries in the long run. To facilitate this, the maintainers recommend using English at least for the titles of Issues, Pull Requests, and Commits, while accommodating local languages in the contents.

Popular Use Case 🌟

- Deploy a Multi-Cloud Infra with GPUs and enjoy multiple LLMs in parallel (YouTube)

- LLM-related scripts

Index 🔗

- Prerequisites

- How to Run

- How to Use

- How to Build

- How to Contribute

Prerequisites 🌍

Environment

- Linux (recommended: Ubuntu 22.04)

- Docker and Docker Compose

- Golang (recommended: v1.23.0) to build the source

Dependency

Open source packages used in this project

How to Run 🚀

(1) Download CB-Tumblebug

- Clone the CB-Tumblebug repository:

git clone https://github.com/cloud-barista/cb-tumblebug.git $HOME/go/src/github.com/cloud-barista/cb-tumblebug

cd ~/go/src/github.com/cloud-barista/cb-tumblebug

Optionally, you can register aliases for the CB-Tumblebug directory to simplify navigation:

echo "alias cdtb='cd $HOME/go/src/github.com/cloud-barista/cb-tumblebug'" >> ~/.bashrc

echo "alias cdtbsrc='cd $HOME/go/src/github.com/cloud-barista/cb-tumblebug/src'" >> ~/.bashrc

echo "alias cdtbtest='cd $HOME/go/src/github.com/cloud-barista/cb-tumblebug/src/testclient/scripts'" >> ~/.bashrc

source ~/.bashrc

- Check Docker Compose Installation:

Ensure that Docker Engine and Docker Compose are installed on your system.

If not, you can use the following script to install them (note: this script is not intended for production environments):

cd ~/go/src/github.com/cloud-barista/cb-tumblebug

./scripts/installDocker.sh

- Start All Components Using Docker Compose:

To run all components, use the following command:

cd ~/go/src/github.com/cloud-barista/cb-tumblebug

sudo docker compose up

This command will start all components as defined in the preconfigured docker-compose.yaml file. For configuration customization, please refer to the guide.

The following components will be started:

- ETCD: CB-Tumblebug KeyValue DB

- CB-Spider: a Cloud API controller

- CB-MapUI: a simple Map-based GUI web server

- CB-Tumblebug: the system with API server

After running the command, you should see output similar to the following:

Now, the CB-Tumblebug API server is accessible at: http://localhost:1323/tumblebug/api

Additionally, CB-MapUI is accessible at: http://localhost:1324

Note: Before using CB-Tumblebug, you need to initialize it.

To provision multi-cloud infrastructures with CB-TB, you must register the connection information (credentials) for the clouds, as well as commonly used images and specifications.

- Create a credentials.yaml file and input your cloud credentials

- Overview

- credentials.yaml is a file that contains the credentials needed to use the APIs of the clouds supported by CB-TB (AWS, GCP, Azure, Alibaba, etc.).

- It should be located in the ~/.cloud-barista/ directory and managed securely.

- Refer to template.credentials.yaml for the template.

- Create the credentials.yaml file

Automatically generate the credentials.yaml file in the ~/.cloud-barista/ directory using the CB-TB script:

cd ~/go/src/github.com/cloud-barista/cb-tumblebug

./init/genCredential.sh

- Input credential data

Enter your credential data in ~/.cloud-barista/credentials.yaml (Reference: How to obtain a credential for each CSP).

### Cloud credentials for credential holders (default: admin)
credentialholder:
  admin:
    alibaba:
      # ClientId(ClientId): client ID of the EIAM application
      # Example: app_mkv7rgt4d7i4u7zqtzev2mxxxx
      ClientId:
      # ClientSecret(ClientSecret): client secret of the EIAM application
      # Example: CSEHDcHcrUKHw1CuxkJEHPveWRXBGqVqRsxxxx
      ClientSecret:
    aws:
      # ClientId(aws_access_key_id)
      # ex: AKIASSSSSSSSSSS56DJH
      ClientId:
      # ClientSecret(aws_secret_access_key)
      # ex: jrcy9y0Psejjfeosifj3/yxYcgadklwihjdljMIQ0
      ClientSecret:
    ...

- Encrypt credentials.yaml into credentials.yaml.enc

To protect sensitive information, credentials.yaml is not used directly. Instead, it must be encrypted using encCredential.sh. The encrypted file credentials.yaml.enc is then used by init.py. This approach ensures that sensitive credentials are not stored in plain text.

- Encrypting Credentials

init/encCredential.sh

If you need to update your credentials, decrypt the encrypted file using decCredential.sh, make the necessary changes to credentials.yaml, and then re-encrypt it.

- Decrypting Credentials

init/decCredential.sh

- (INIT) Register all multi-cloud connection information and common resources

- How to register

Refer to README.md for init.py, and execute the script as follows (enter 'y' at the confirmation prompts):

cd ~/go/src/github.com/cloud-barista/cb-tumblebug

./init/init.sh

- The credentials in ~/.cloud-barista/credentials.yaml.enc (the encrypted form of credentials.yaml) will be registered automatically, along with all CSP and region information recorded in cloudinfo.yaml.

- Note: You can check the latest regions and zones of each CSP using update-cloudinfo.py and review the file for updates (contributions to updates are welcome).

- Common images and specifications recorded in the cloudimage.csv and cloudspec.csv files in the assets directory will be registered automatically.

- init.py applies hybrid encryption for secure transmission of credentials:

- Retrieve RSA Public Key: Use the /credential/publicKey API to get the public key.

- Encrypt Credentials: Encrypt the credentials with a randomly generated AES key, then encrypt the AES key with the RSA public key.

- Transmit Encrypted Data: Send the encrypted credentials and AES key to the server. The server decrypts the AES key and uses it to decrypt the credentials.

This method ensures that your credentials are transmitted securely and protected during registration. See init.py for a Python implementation.

For details, check out the Secure Credential Registration Guide (How to use the credential APIs).

(4) Shutting down and Version Upgrade

- Shutting down CB-TB and related components

- Stop all containers with Ctrl+C, or run sudo docker compose stop or sudo docker compose down.

(When CB-TB receives a shutdown event, it shuts down gracefully: API requests that can be processed within 10 seconds will be completed.)

- In case cleanup is needed due to internal system errors

- Upgrading the CB-TB & CB-Spider versions

The following cleanup steps are unnecessary if you clearly understand the impact of the upgrade.

How to Use CB-TB Features 🌟

- Using CB-TB MapUI (recommended)

- Using CB-TB REST API (recommended)

- Using CB-TB Test Scripts

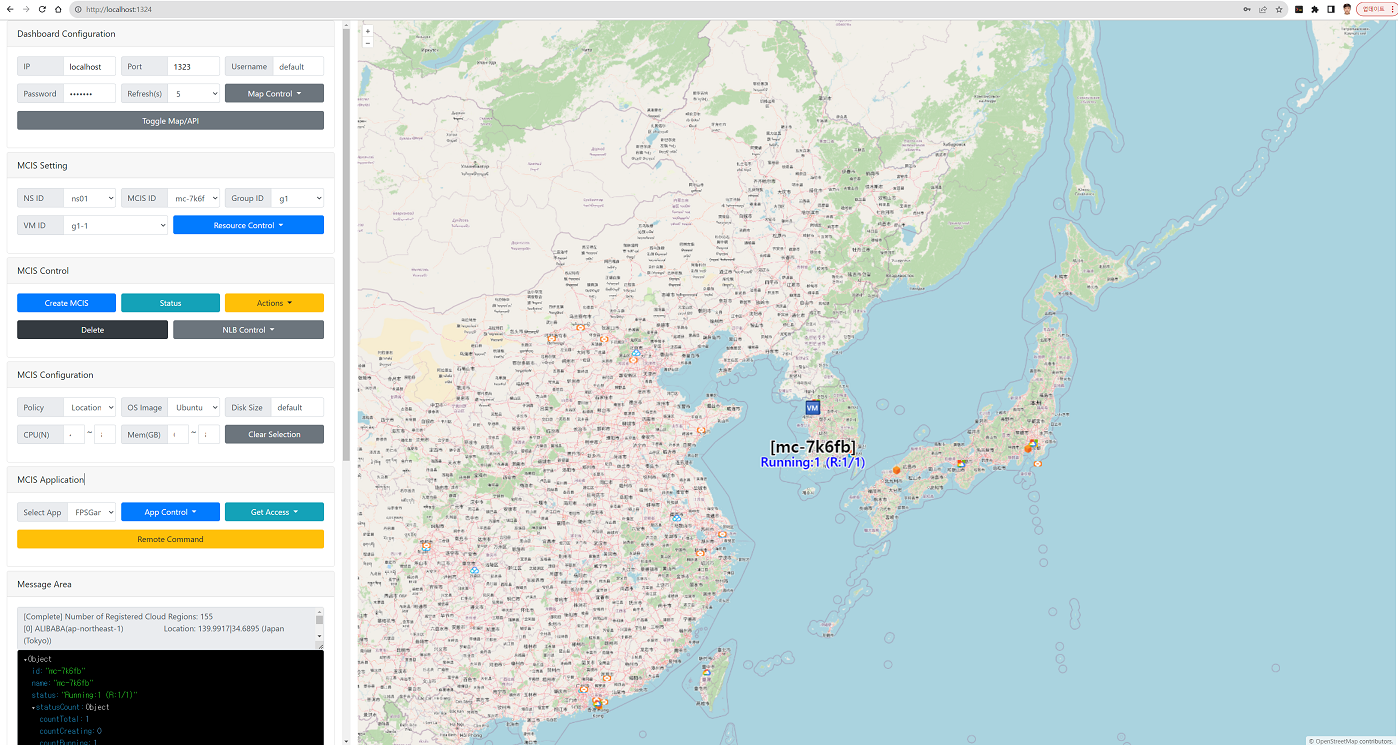

Using CB-TB MapUI

- With CB-MapUI, you can create, view, and control Multi-Cloud infra.

- CB-MapUI is a project to visualize the deployment of MCI in a map GUI.

- CB-MapUI also runs with CB-Tumblebug by default (edit docker-compose.yaml to disable it).

- Access via web browser at http://{HostIP}:1324

Using CB-TB REST API

Using CB-TB Scripts

src/testclient/scripts/ provides Bash shell-based scripts that simplify and automate the MCI (MC-Infra) provisioning procedures, which require complex steps.


Setup Test Environment

- Go to src/testclient/scripts/

- Configure conf.env

- Provide basic test information such as CB-Spider and CB-TB server endpoints, cloud regions, test image names, test spec names, etc.

- Information for many cloud types has already been investigated and filled in, so the file can be used without modification (however, check the charges that apply to the specified specs).

- Configure testSet.env

- Set the cloud and region configurations to be used for MCI provisioning in a file (you can change the existing testSet.env or copy and use it)

- Specify the types of CSPs to combine

- Change the number in NumCSP= to specify the total number of CSPs to combine

- Specify the types of CSPs to combine by rearranging the lines in L15-L24 (only the first NumCSP lines are used)

- Example: To combine aws and alibaba, change NumCSP=2 and rearrange the lines so that IndexAWS=$((++IX)) and IndexAlibaba=$((++IX)) come first

- Specify the regions of the CSPs to combine

- Go to each CSP setting item, for example:

# AWS (Total: 21 Regions)

- Specify the number of regions to configure in NumRegion[$IndexAWS]=2 (in the example, it is set to 2)

- Set the desired regions by rearranging the lines of the region list (if NumRegion[$IndexAWS]=2, the top 2 listed regions will be selected)

- Be aware!

- Creating VMs on public CSPs such as AWS, GCP, Azure, etc. may incur charges.

- With the default setting of testSet.env, TestClouds (TestCloud01, TestCloud02, TestCloud03) will be used to create mock VMs.

- TestCloud01, TestCloud02, and TestCloud03 are not real CSPs. They are used for testing purposes and do not support SSH into VMs.

- Anyway, please be aware of cloud usage costs when using public CSPs.
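To make the line-order rules of testSet.env concrete, here is a small Go model of them: $((++IX)) pre-increments IX, so each IndexXXX line gets the next index in line order, only CSPs whose index is at most NumCSP are used, and only the first NumRegion entries of a region list are selected. This models the behavior described above rather than reproducing the scripts, and the CSP and region lists in main are hypothetical examples, not the actual contents of testSet.env.

```go
package main

import "fmt"

// assignIndexes mimics consecutive testSet.env lines such as
//
//	IndexAWS=$((++IX))
//	IndexAlibaba=$((++IX))
//
// $((++IX)) increments IX before yielding it, so indexes follow line order from 1.
func assignIndexes(lines []string) map[string]int {
	idx := make(map[string]int, len(lines))
	ix := 0
	for _, csp := range lines {
		ix++
		idx[csp] = ix
	}
	return idx
}

// selectedCSPs returns, in line order, the CSPs whose index is at most numCSP.
func selectedCSPs(lines []string, numCSP int) []string {
	idx := assignIndexes(lines)
	var out []string
	for _, csp := range lines {
		if idx[csp] <= numCSP {
			out = append(out, csp)
		}
	}
	return out
}

// topN mirrors NumRegion[...]=n: only the first n listed regions are used.
func topN(items []string, n int) []string {
	if n < 0 {
		n = 0
	}
	if n > len(items) {
		n = len(items)
	}
	return items[:n]
}

func main() {
	// Hypothetical line order after rearranging testSet.env, with NumCSP=2.
	csps := []string{"AWS", "Alibaba", "GCP", "Azure"}
	fmt.Println("CSPs:", selectedCSPs(csps, 2))

	// Hypothetical AWS region list with NumRegion[$IndexAWS]=2.
	awsRegions := []string{"ap-southeast-1", "ca-central-1", "us-east-1"}
	fmt.Println("AWS regions:", topN(awsRegions, 2))
}
```

Running it prints CSPs: [AWS Alibaba] and AWS regions: [ap-southeast-1 ca-central-1], which is why moving a line up is enough to include that CSP or region.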

Integrated Tests

- You can test the entire process at once by executing create-all.sh and clean-all.sh included in src/testclient/scripts/sequentialFullTest/

└── sequentialFullTest  # Automatic testing from cloud information registration to NS creation, Resource creation, and MCI creation
    ├── check-test-config.sh  # Check the multi-cloud infrastructure configuration specified in the current testSet
    ├── create-all.sh  # Automatic testing from cloud information registration to NS creation, Resource creation, and MCI creation
    ├── gen-sshKey.sh  # Generate SSH key files to access MCI
    ├── command-mci.sh  # Execute remote commands on the created MCI (multiple VMs)
    ├── deploy-nginx-mci.sh  # Automatically deploy Nginx on the created MCI (multiple VMs)
    ├── create-mci-for-df.sh  # Create MCI for hosting CB-Dragonfly
    ├── deploy-dragonfly-docker.sh  # Automatically deploy CB-Dragonfly on MCI and set up the environment
    ├── clean-all.sh  # Delete all objects in reverse order of creation
    ├── create-k8scluster-only.sh  # Create a K8s cluster for the multi-cloud infrastructure specified in the testSet
    ├── get-k8scluster.sh  # Get K8s cluster information for the multi-cloud infrastructure specified in the testSet
    ├── clean-k8scluster-only.sh  # Delete the K8s cluster for the multi-cloud infrastructure specified in the testSet
    ├── force-clean-k8scluster-only.sh  # Force delete the K8s cluster for the multi-cloud infrastructure specified in the testSet if deletion fails
    ├── add-k8snodegroup.sh  # Add a new K8s node group to the created K8s cluster
    ├── remove-k8snodegroup.sh  # Delete the newly created K8s node group in the K8s cluster
    ├── set-k8snodegroup-autoscaling.sh  # Change the autoscaling setting of the created K8s node group to off
    ├── change-k8snodegroup-autoscalesize.sh  # Change the autoscale size of the created K8s node group
    ├── deploy-weavescope-to-k8scluster.sh  # Deploy weavescope to the created K8s cluster
    └── executionStatus  # Logs of the tests performed (information is added when testAll is executed and removed when cleanAll is executed. You can check the ongoing tasks)

- MCI Creation Test

- ./create-all.sh -n shson -f ../testSetCustom.env # Create MCI with the cloud combination configured in ../testSetCustom.env

- The script automatically proceeds through the process, checking the MCI creation configuration specified in ../testSetCustom.env

- Example of execution result

Table: All VMs in the MCI : cb-shson

ID                    Status   PublicIP       PrivateIP      CloudType  CloudRegion     CreatedTime
--                    ------   --------       ---------      ---------  -----------     -----------
aws-ap-southeast-1-0  Running  xx.250.xx.73   192.168.2.180  aws        ap-southeast-1  2021-09-17 14:59:30
aws-ca-central-1-0    Running  x.97.xx.230    192.168.4.98   aws        ca-central-1    2021-09-17 14:59:58
gcp-asia-east1-0      Running  xx.229.xxx.26  192.168.3.2    gcp        asia-east1      2021-09-17 14:59:42

[DATE: 17/09/2021 15:00:00] [ElapsedTime: 49s (0m:49s)] [Command: ./create-mci-only.sh all 1 shson ../testSetCustom.env 1]

[Executed Command List]
[Resource:aws-ap-southeast-1(28s)] create-resource-ns-cloud.sh (Resource) aws 1 shson ../testSetCustom.env
[Resource:aws-ca-central-1(34s)] create-resource-ns-cloud.sh (Resource) aws 2 shson ../testSetCustom.env
[Resource:gcp-asia-east1(93s)] create-resource-ns-cloud.sh (Resource) gcp 1 shson ../testSetCustom.env
[MCI:cb-shsonvm4(19s+More)] create-mci-only.sh (MCI) all 1 shson ../testSetCustom.env

[DATE: 17/09/2021 15:00:00] [ElapsedTime: 149s (2m:29s)] [Command: ./create-all.sh -n shson -f ../testSetCustom.env -x 1]

- MCI Removal Test (use the same input parameters for deletion as for creation)

./clean-all.sh -n shson -f ../testSetCustom.env # Perform removal of created resources according to ../testSetCustom.env

- Be aware!

- If you created MCI (VMs) for testing in public clouds, the VMs may incur charges.

- You need to terminate the MCI by using clean-all.sh to avoid unexpected billing.

- Anyway, please be aware of cloud usage costs when using public CSPs.

- Generate MCI SSH access keys and access each VM

- ./gen-sshKey.sh -n shson -f ../testSetCustom.env # Return access keys for all VMs configured in MCI

- Example of execution result

...
[GENERATED PRIVATE KEY (PEM, PPK)]
[MCI INFO: mc-shson]
[VMIP]: 13.212.254.59 [MCIID]: mc-shson [VMID]: aws-ap-southeast-1-0
./sshkey-tmp/aws-ap-southeast-1-shson.pem
./sshkey-tmp/aws-ap-southeast-1-shson.ppk
...

[SSH COMMAND EXAMPLE]
[VMIP]: 13.212.254.59 [MCIID]: mc-shson [VMID]: aws-ap-southeast-1-0
ssh -i ./sshkey-tmp/aws-ap-southeast-1-shson.pem cb-user@13.212.254.59 -o StrictHostKeyChecking=no
...
[VMIP]: 35.182.30.37 [MCIID]: mc-shson [VMID]: aws-ca-central-1-0
ssh -i ./sshkey-tmp/aws-ca-central-1-shson.pem cb-user@35.182.30.37 -o StrictHostKeyChecking=no

- Verify MCI via SSH remote command execution

./command-mci.sh -n shson -f ../testSetCustom.env # Execute IP and hostname retrieval for all VMs in MCI

- K8s Cluster Test (WIP: Stability work in progress for each CSP)

./create-resource-ns-cloud.sh -n tb -f ../testSet.env # Create Resource required for K8s cluster creation

./create-k8scluster-only.sh -n tb -f ../testSet.env -x 1 -z 1 # Create K8s cluster (-x maximum number of nodes, -z additional name for K8s node group and K8s cluster)

./get-k8scluster.sh -n tb -f ../testSet.env -z 1 # Get K8s cluster information

./add-k8snodegroup.sh -n tb -f ../testSet.env -x 1 -z 1 # Add a new K8s node group to the K8s cluster

./change-k8snodegroup-autoscalesize.sh -n tb -f ../testSet.env -x 1 -z 1 # Change the autoscale size of the specified K8s node group

./deploy-weavescope-to-k8scluster.sh -n tb -f ../testSet.env -y n # Deploy weavescope to the created K8s cluster

./set-k8snodegroup-autoscaling.sh -n tb -f ../testSet.env -z 1 # Change the autoscaling setting of the new K8s node group to off

./remove-k8snodegroup.sh -n tb -f ../testSet.env -z 1 # Delete the newly created K8s node group

./clean-k8scluster-only.sh -n tb -f ../testSet.env -z 1 # Delete the created K8s cluster

./force-clean-k8scluster-only.sh -n tb -f ../testSet.env -z 1 # Force delete the created K8s cluster if deletion fails

./clean-resource-ns-cloud.sh -n tb -f ../testSet.env # Delete the created Resource

Multi-Cloud Infrastructure Use Cases

Deploying an MCI Xonotic (3D FPS) Game Server

Distributed Deployment of MCI Weave Scope Cluster Monitoring

Deploying MCI Jitsi Video Conferencing

Automatic Configuration of MCI Ansible Execution Environment

How to Build 🛠️

(1) Set Up Prerequisites

(2) Build and Run CB-Tumblebug

(2-1) Option 1: Run CB-Tumblebug with Docker Compose (Recommended)

- Run Docker Compose with the build option

To build the current CB-Tumblebug source code into a container image and run it along with the other containers, use the following command:

cd ~/go/src/github.com/cloud-barista/cb-tumblebug

sudo DOCKER_BUILDKIT=1 docker compose up --build

This command automatically builds CB-Tumblebug from the local source code and starts it within a Docker container, along with the other necessary services defined in the docker-compose.yaml file. The DOCKER_BUILDKIT=1 setting speeds up the build by enabling the Go build cache.

(2-2) Option 2: Run CB-Tumblebug from the Makefile

- Build the Golang source code using the Makefile

cd ~/go/src/github.com/cloud-barista/cb-tumblebug/src

make

All dependencies will be downloaded automatically by Go.

The initial build will take some time, but subsequent builds will be faster thanks to the Go build cache.

Note: To update the Swagger API documentation, run make swag.

- Set the environment variables required to run CB-TB (in another tab)

- Check and configure the contents of cb-tumblebug/conf/setup.env (CB-TB environment variables; modify as needed)

- Execute the built cb-tumblebug binary by using make run

cd ~/go/src/github.com/cloud-barista/cb-tumblebug/src

make run

How to Contribute 🙏

CB-TB welcomes improvements from both new and experienced contributors!

Check out CONTRIBUTING.

Contributors ✨

Thanks goes to these wonderful people (emoji key):

License
